Security Strategies for the Internet

Info: 7603 words (30 pages) Dissertation

Published: 16th Dec 2019

Tagged: Cyber Security, Internet

Table of Contents

1. The World Wide Web

1.1. URL, URI and URN

1.2. HTML

1.3. HTTP

1.4. Browsers

1.5. Virtual Hosting

2. DNS

2.1. Why DNS Security is important?

2.2. DNS Firewall

2.3. DNS Software Threats

3. Apache Server

3.1. Apache Configuration

3.2. Apache Directives and Modules

4. Security

4.1. SSL

4.2. HTTPS

4.3. SSH Keys

4.4. Firewalls

4.5. VPNs and Private Networking

5. MYSQL Server

6. EVALUATION

7. Conclusion

8. List of references

1. The World Wide Web

The World Wide Web (WWW), or simply the Web, is an information space in which documents and other web resources are identified by Uniform Resource Locators (URLs), interlinked by hypertext links, and accessible over the Internet. The British scientist Tim Berners-Lee invented the World Wide Web in 1989. Hypertext and the Internet already existed at that time, but no one had yet found a way to use the Internet to link one document directly to another. He wrote the first web browser in 1990 while employed at CERN in Switzerland. The browser was released outside CERN in 1991, first to other research institutions starting in January 1991 and then to the general public on the Internet in August 1991.

Tim Berners-Lee proposed three core technologies that would allow all computers to understand one another: HTML, URL and HTTP. He also created the world's first web browser and web server.

The World Wide Web is a system of Internet servers that support specially formatted documents. The documents are formatted in a markup language called HTML (HyperText Markup Language), which supports links to other documents as well as image, audio and video files.

The World Wide Web opened the Internet to everyone, not just scientists. It connected the world in a way that was not previously possible and made it much easier for people to find information, communicate and share. It allowed people to share their activities and ideas through social networking sites, video sharing and blogs.

1.1. URL, URI and URN

A URL (Uniform Resource Locator), as the name suggests, provides a way to locate a resource on the Web, the hypertext system that runs over the Internet. A URL contains the name of the protocol used to access the resource and a resource name. The first part of a URL identifies which protocol to use. The second part identifies the IP address or domain name where the resource is located.

A URL is the most common type of Uniform Resource Identifier (URI). URIs are strings of characters used to identify a resource over a network.

URL schemes include http (Hypertext Transfer Protocol) and https (HTTP Secure) for web resources, ftp for files on a File Transfer Protocol (FTP) server, mailto for email addresses, and telnet for sessions on remote computers.

A URL is defined as the universal address of documents and other resources on the World Wide Web.

A URL is an address that takes users to a specific resource online, such as a web page, a video or another file. A URL is a formatted text string used by web browsers, email clients and other software to identify a network resource on the Internet. Network resources can be ordinary web pages, other text files, graphics or programs. A URL string consists of three substrings: the protocol designation, the host name or address, and the document or resource location.
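The three parts of a URL described above can be separated with Python's standard `urllib.parse` module. The following is a minimal sketch; the helper name `split_url` and the example URLs are illustrative assumptions, not part of any particular system.

```python
from urllib.parse import urlparse


def split_url(url: str) -> tuple[str, str, str]:
    """Return the (protocol, host, resource location) parts of a URL."""
    parts = urlparse(url)
    # scheme -> protocol, netloc -> host name or address, path -> resource location
    return parts.scheme, parts.netloc, parts.path


for example in (
    "https://www.example.com/docs/index.html",
    "ftp://ftp.example.org/pub/readme.txt",
    "mailto:someone@example.com",
):
    print(split_url(example))
```

For the `mailto:` example the host part comes back empty, because the email address itself is the resource name.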

A URI (Uniform Resource Identifier) is a sequence of characters that identifies a logical or physical resource. In other words, a URI is a string of characters used to identify a name or a resource on the Internet. Such identification enables interaction with representations of the resource over a network using specific protocols. Many documents discuss URIs, and they all agree that URIs are, as the third letter indicates, identifiers, that is, names. They identify resources and often allow the user to access representations of those resources.

A URN (Uniform Resource Name) is a name for an Internet resource that, unlike a URL, has persistent value: the owner of the URN can count on someone else, or a program, always being able to find the resource. A recurring problem on the Web is that content is occasionally moved to a new location, or to a new page on the same site. Because links are made using Uniform Resource Locators (URLs), they no longer work when the content is moved. A URN, by contrast, is a persistent name that remains useful even if the data it names is moved elsewhere. Unlike a URL, which stops working when the content is moved, a URN can always be used to find the resource, which solves the recurring problem of moved data.

URN and URL are sometimes wrongly used interchangeably. With a URL, the user must know the location of a particular resource, whereas with a URN the user only needs to know the name of the resource and does not need to know whether it has moved or is still in the same place. URLs and URNs are both kinds of Uniform Resource Identifier, but they differ in syntax and usage. URNs were originally conceived in the 1990s as one part of a three-part information architecture for the Internet. The other two parts were URLs and Uniform Resource Characteristics (URCs), a metadata framework.
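The practical difference can be illustrated with a small sketch: a URN is parsed into a namespace and a name, while a URL is parsed into a location. The helper `describe_identifier` and the ISBN example are illustrative assumptions; the check only inspects syntax and does not resolve either identifier.

```python
from urllib.parse import urlparse


def describe_identifier(uri: str) -> str:
    """Classify a URI as a URN (a name) or a URL (a location), in a simplified way."""
    parts = urlparse(uri)
    if parts.scheme.lower() == "urn":
        # A URN has the form urn:<namespace>:<namespace-specific name>
        namespace, _, name = parts.path.partition(":")
        return f"URN in namespace '{namespace}' naming '{name}'"
    return f"URL locating '{parts.path}' on host '{parts.netloc}' via {parts.scheme}"


print(describe_identifier("urn:isbn:0451450523"))
print(describe_identifier("https://www.example.com/books/1"))
```

Actually retrieving the book named by the `urn:isbn:` identifier would still need a separate resolution service that maps the name to a current location.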

URI: tells you which neighborhood you should sleep in.

URL: tells you which house, in which neighborhood, you should sleep in.

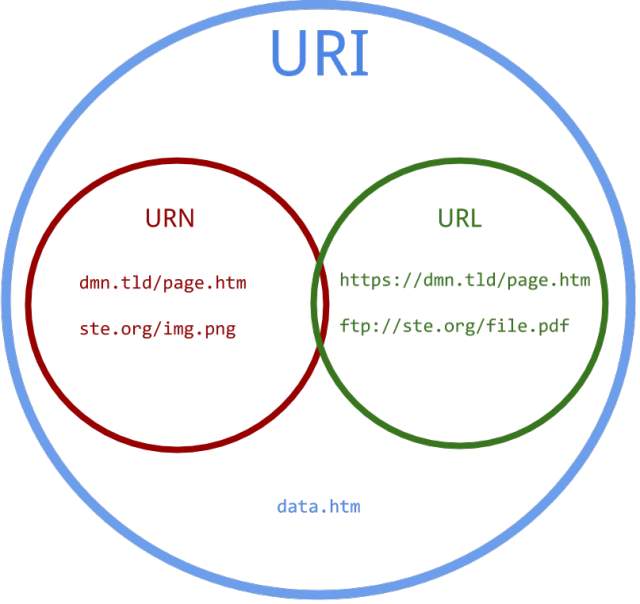

Figure 1: The difference between URL, URI and URN.

1.2. HTML

HTML is a computer language devised to allow website creation. These websites can then be viewed by anyone else connected to the Internet. It is relatively easy to learn, with the basics being accessible to most people in one sitting, and quite powerful in what it allows you to create. HTML stands for HyperText Markup Language.

HTML consists of a series of short codes typed into a text file by the site author; these are the tags. The text is then saved as an HTML file and viewed through a browser, such as Internet Explorer or Netscape Navigator. The browser reads the file, translates the text into a visible form and renders the page as the author intended. Writing HTML entails using tags correctly to create your vision. You can use anything from a basic text editor to a powerful graphical editor to create HTML pages.

HTML is the set of markup symbols or codes inserted in a file intended for display in a World Wide Web browser. The markup tells the web browser how to display a web page's words and images for the user. Each individual markup code is referred to as an element (or tag). Some tags come in pairs that indicate when a display effect is to start and when it is to end. HTML is the primary building block for creating a website. It is a very basic markup language and requires memorizing only a few dozen HTML commands that shape the look and layout of a web page. Before writing any HTML code or styling your first web page, you must decide on an HTML editor or text editor, such as Notepad or WordPad.

Figure 2: HTML code and how the HTML language works.
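To see how paired tags mark where an effect starts and ends, the sketch below feeds a tiny page to Python's standard `html.parser` module and records each opening and closing tag in order. The page content and the class name `TagLister` are invented for illustration.

```python
from html.parser import HTMLParser

PAGE = """<!DOCTYPE html>
<html>
  <head><title>My first page</title></head>
  <body>
    <h1>Hello</h1>
    <p>Visit <a href="https://www.example.com/">this link</a>.</p>
    <img src="photo.jpg" alt="A photo">
  </body>
</html>"""


class TagLister(HTMLParser):
    """Record each opening and closing tag in the order the parser meets it."""

    def __init__(self):
        super().__init__()
        self.events = []

    def handle_starttag(self, tag, attrs):
        self.events.append(("start", tag))

    def handle_endtag(self, tag):
        self.events.append(("end", tag))


lister = TagLister()
lister.feed(PAGE)
for kind, tag in lister.events:
    print(kind, tag)
```

The `<img>` element produces only an opening tag: images are "void" elements that have no closing partner.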

1.3. HTTP

HTTP (Hypertext Transfer Protocol) is the set of rules for transferring files such as text, graphic images, sound, video and other multimedia content on the World Wide Web. As soon as a web user opens a web browser, the user is indirectly making use of HTTP. HTTP is an application protocol that runs on top of the TCP/IP suite of protocols, the foundation protocols of the Internet. HTTP was designed for communication between web browsers and web servers, but it can also be used for other purposes. HTTP follows a classic client-server model: a client opens a connection to make a request, then waits until it receives a response. HTTP is a stateless protocol, meaning that the server does not keep any data between two requests. Although it is usually carried over TCP/IP, it can be used on any reliable transport layer.

Information is exchanged between clients and servers in the form of hypertext documents, from which HTTP gets its name. Hypertext is structured text that uses logical links, or hyperlinks, between nodes containing text. Hypertext files can be written using the HyperText Markup Language (HTML). Using HTTP and HTML, clients can request different kinds of content (such as text, images, video and application data) from the web and application servers that host it.

HTTP follows a request-response model in which the client makes a request and the server issues a response that includes not only the requested content but also relevant status information about the request. This self-contained design suits the distributed nature of the Internet, where a request or response might pass through many intermediate routers and proxy servers. It also allows intermediary servers to perform value-added functions such as load balancing, caching, encryption and compression. HTTP is an application-layer protocol and relies on an underlying transport protocol such as the Transmission Control Protocol (TCP) to function. HTTP resources such as web pages are identified across the Internet by unique identifiers known as Uniform Resource Locators (URLs).
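Because HTTP messages are plain text, the request-response exchange can be illustrated without a network. The sketch below builds a minimal HTTP/1.1 `GET` request and splits a sample response into its status code, headers and body. The host name, the sample response and the helper names are assumptions for illustration; a real client (for example Python's `http.client`) handles many more cases, such as chunked transfer encoding.

```python
def build_request(host: str, path: str = "/") -> bytes:
    """Build a minimal HTTP/1.1 GET request: request line, headers, then a blank line."""
    lines = [
        f"GET {path} HTTP/1.1",  # method, resource and protocol version
        f"Host: {host}",         # required in HTTP/1.1
        "Connection: close",     # ask the server to close the connection after replying
        "",
        "",                      # the two empty items end the message with a blank line
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_response(raw: bytes) -> tuple[int, dict[str, str], bytes]:
    """Split a raw HTTP response into (status code, headers, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")  # a blank line separates headers from body
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    status_code = int(status_line.split(" ", 2)[1])  # e.g. "HTTP/1.1 200 OK" -> 200
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return status_code, headers, body


print(build_request("www.example.com", "/index.html"))
sample = (
    b"HTTP/1.1 200 OK\r\n"
    b"Content-Type: text/html\r\n"
    b"Content-Length: 11\r\n"
    b"\r\n"
    b"<h1>Hi</h1>"
)
print(parse_response(sample))
```

Each request carries everything the server needs to answer it, including the `Host` header, which reflects HTTP's stateless design.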

1.4. Browsers

A web browser is a software program that allows a user to locate, access and display web pages. In common usage, "web browser" is usually shortened to "browser". Browsers are used primarily for displaying and accessing websites on the Internet, as well as other content created using HyperText Markup Language (HTML). Browsers translate web pages and websites delivered using Hypertext Transfer Protocol (HTTP) into human-readable content. They can also handle other protocols and prefixes, such as secure HTTP (https:), File Transfer Protocol (ftp:), email handling (mailto:) and files (file:). In addition, most browsers support external plug-ins required to display active content, such as in-page video, audio and Flash content.

Without browsers, the Internet as we know it today would not be possible. Before the first widely used graphical browser, Mosaic, was released in 1993, the Internet was text-based, dull and required technical knowledge to use. Because of this, the number of people who had the ability and the interest to use the Internet was limited. Mosaic helped make the Internet ubiquitous. Its graphical interface made navigating the web easy to understand, and its ability to display graphics next to text made web pages more interesting to browse.

Internet browsers have evolved into powerful tools that let you access your favorite websites safely and quickly. Modern browsers have many useful features. Tabbed browsing opens multiple web pages in separate tabs instead of requiring a resource-heavy separate window for each page, which is very useful when you want to search through a lot of information in less time. The ability to mute sound in individual tabs is another useful feature that many modern browsers support.

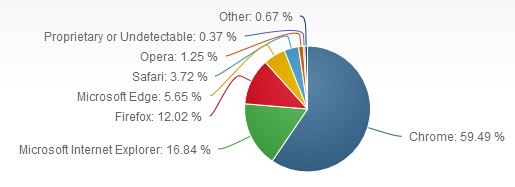

Figure 3: Popular browsers in 2017.

1.5. Virtual Hosting

Virtual hosting is the provision of web server hosting services so that a company or individual does not have to buy and maintain its own web server and connections to the Internet. A virtual hosting provider is sometimes called a web or Internet space provider; some companies that provide this service simply call it hosting. Typically, virtual hosting provides a customer who wants a website with domain name registration assistance, multiple domain names that map to the registered domain name, an allocation of file storage and directory setup for the website files (HTML and graphic image files), e-mail addresses and, optionally, website creation services. The virtual hosting user, or website owner, only needs a File Transfer Protocol (FTP) program for exchanging files with the virtual host.

Several virtual hosting providers give customers more control over their website file system, e-mail names, passwords and other resources, and say that they are providing each customer with a virtual server, that is, a server that appears to be entirely their own. When a customer actually wants its own server, some hosting providers allow the customer to rent a dedicated server at the hosting provider's location. If customers can place their own purchased equipment at the provider's location, this is known as colocation. Virtual hosting is also much cheaper than dedicated hosting. Some web hosting companies also do not make you sign a yearly contract, so you can change hosting companies more easily if necessary. Virtual hosting also gives you more access to the hosting company's technical staff if you need it. So, whereas shared web hosting is fine for websites with little traffic and dedicated hosting is appropriate for larger companies, virtual hosting is well suited to small e-commerce operations with a fair amount of traffic.

2. DNS

The Domain Name System (DNS) manages Internet navigation by locating domain names and mapping them to IP addresses. DNS, as originally designed, has no means of determining whether domain name data comes from the authorized domain owner or has been forged. This security weakness leaves the system vulnerable to a number of attacks.

DNS takes names, such as www.appliedengineeringcollege.com, and converts them to numbers: it resolves the name to an IP address such as 192.168.1.100, which TCP/IP can then use to locate and communicate with the desired computer. DNS is important because humans do not remember numbers well. We can remember www.appliedengineeringcollege.com, but we cannot easily remember that to communicate with it we need to type http://192.168.1.100 into our web browser. DNS solves that problem for us. Essentially, DNS is just a large distributed database. The data is organized into individual records that store information. DNS allows users and administrators to add records to, manage and retrieve records from the database it maintains. There are many record types available in DNS, each representing the type and use of the data it stores.

The DNS consists of three components. The first is a name space that establishes the rules for creating and structuring legal DNS names. The second is a globally distributed database implemented on a network of name servers. The third is resolver software, which knows how to formulate a DNS query.

Name Space:

The name space defines the generic top-level domains (TLDs), such as .com, .org, .net and .gov, and the country-code TLDs, such as .sa, .uk and .us. Each label in a name can be up to 63 characters long; for host names, upper- and lower-case letters, digits and the hyphen make up the complete list of legal characters.
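The label rules can be checked with a short sketch. It assumes ASCII host names only (internationalized names must first be converted to ASCII, which this sketch does not do) and applies the common letter-digit-hyphen rule together with the 253-character limit on a full name written as text. The function name is hypothetical.

```python
import re

# One label: letters, digits and hyphens, 1-63 characters, not starting or ending with "-".
LABEL = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?")


def is_valid_hostname(name: str) -> bool:
    """Check an ASCII host name against the classic letter-digit-hyphen label rules."""
    name = name[:-1] if name.endswith(".") else name  # allow one trailing root dot
    if not name or len(name) > 253:
        return False
    return all(LABEL.fullmatch(label) for label in name.split("."))


print(is_valid_hostname("www.example.com"))
print(is_valid_hostname("-bad.example.com"))
```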

Name Servers:

The second key component of the DNS is a globally distributed network of name servers. Every zone has a primary name server, which is the authoritative source for the zone's resource records. The primary name server is the only server that can be updated by local administrative action. Secondary name servers hold replicated copies of the primary server's data to provide redundancy and reduce the load on the primary server. In addition, name servers usually cache data they have looked up, which can greatly speed up later requests for the same data.
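Caching can be sketched as a table of answers, each kept only for its time-to-live (TTL), the number of seconds the record's owner allows it to be reused. This is a simplified in-memory model with hypothetical class and method names; it ignores negative caching and many other details of a real resolver cache. The address comes from the 192.0.2.0/24 range reserved for documentation, and the clock is injectable so the example does not have to wait.

```python
import time
from typing import Callable, Optional


class DnsCache:
    """A toy name-server cache: answers are reused until their TTL expires."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._entries: dict[tuple[str, str], tuple[str, float]] = {}

    def put(self, name: str, rtype: str, value: str, ttl: int) -> None:
        # Store the answer together with the moment at which it stops being valid.
        self._entries[(name.lower(), rtype)] = (value, self._clock() + ttl)

    def get(self, name: str, rtype: str) -> Optional[str]:
        key = (name.lower(), rtype)
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # stale: an upstream server must be asked again
            return None
        return value


now = [0.0]  # fake clock for the example
cache = DnsCache(clock=lambda: now[0])
cache.put("www.example.com", "A", "192.0.2.10", ttl=300)
print(cache.get("www.example.com", "A"))  # served from the cache
now[0] = 301.0
print(cache.get("www.example.com", "A"))  # expired, so None
```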

Resolver Cache:

The third component of the DNS is the resolver. The resolver is a piece of software implemented in the IP stack of each endpoint, or host. When a host is configured, manually or through DHCP, it is assigned at least one default name server along with its IP address and subnet mask. This name server is the first place the host looks to resolve a domain name into an IP address. If the domain name is in the local zone, the default name server can answer the request itself. Otherwise, the default name server queries one of the root servers. The root server replies with a list of name servers that hold data for the query's TLD.
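The referral process can be modelled as a loop: ask a server, and if it does not hold the answer, follow its referral to a server responsible for a more specific zone. The server names and zone data below are invented stand-ins for real root, TLD and authoritative servers, and the sketch skips caching, retries and network I/O.

```python
# Invented stand-ins for real servers: each one either answers or refers the query onward.
SERVERS = {
    "root": {"referrals": {"com.": "com-tld"}},
    "com-tld": {"referrals": {"example.com.": "example-auth"}},
    "example-auth": {"answers": {"www.example.com.": "192.0.2.10"}},
}


def in_zone(fqdn: str, zone: str) -> bool:
    """True if the fully qualified name lies inside the zone (compared on label boundaries)."""
    return fqdn == zone or fqdn.endswith("." + zone)


def resolve(name: str, start: str = "root") -> tuple[str, list[str]]:
    """Follow referrals from the root until a server returns an address."""
    fqdn = name.rstrip(".").lower() + "."
    server, path = start, []
    while True:
        path.append(server)
        data = SERVERS[server]
        answers = data.get("answers", {})
        if fqdn in answers:
            return answers[fqdn], path
        for zone, next_server in data.get("referrals", {}).items():
            if in_zone(fqdn, zone):
                server = next_server  # referral: ask a server for a more specific zone
                break
        else:
            raise LookupError(f"no server knows {fqdn}")


print(resolve("www.example.com"))
```

The returned path shows the chain root, TLD, authoritative server that the default name server walks on the host's behalf.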

2.1. Why DNS Security is important?

DNS security is important because a failure in DNS can render an organization completely unreachable, and because attackers are actively looking for new ways to take advantage of the DNS protocol and the DNS infrastructure itself. DNS is like a phone book for the Internet. If you know a person's name but not their telephone number, you simply look it up in a phone book. DNS provides this same service to the Internet. Internet users rely on DNS to find websites by name, but browsers communicate with websites using their IP addresses, which are written as a series of numbers separated by dots. DNS is important because it links the domain name to the IP address. Internet criminals can take advantage of DNS weaknesses to create false DNS records. These fake records can trick users into visiting fake websites and downloading malicious software.

2.2. DNS Firewall

The most common security mechanism is the firewall. A firewall helps a person or company prevent IP spoofing. It can also deny DNS requests from IP addresses outside the company's allocated address space, preventing its name resolvers from being exploited as open reflectors in DDoS attacks. In addition, it can inspect DNS traffic for suspicious byte patterns or anomalous traffic in order to block name server software exploit attacks.
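Denying recursive DNS queries from outside the organization's own address space can be sketched with Python's standard `ipaddress` module. The allocated ranges below are invented (they are documentation prefixes) and the function name is hypothetical; in practice this rule usually lives in the name server's access-control settings or in the firewall itself.

```python
import ipaddress

# Hypothetical address space allocated to the organization.
ALLOCATED = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def allow_recursive_query(client_ip: str) -> bool:
    """Answer recursive queries only for clients inside the allocated address space."""
    addr = ipaddress.ip_address(client_ip)
    # Membership between an IPv4 address and an IPv6 network is simply False.
    return any(addr in net for net in ALLOCATED)


print(allow_recursive_query("192.0.2.55"))
print(allow_recursive_query("198.51.100.7"))
```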

Malware has become more advanced and now evades traditional defenses. Traditional protection methods do not block DNS communications to malicious destinations.

Infoblox DNS Firewall is a leading DNS-based network security solution that contains and controls malware that uses DNS to communicate. DNS Firewall works by using DNS Response Policy Zones (RPZ), and optionally Infoblox Threat Insight, to prevent data exfiltration. In addition, by working together with Infoblox DHCP for device fingerprinting, Infoblox Identity Mapping for capturing the user name associated with an infected device, and Infoblox IP address management, DNS Firewall provides actionable information that helps pinpoint infected devices for remediation.
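The core idea of a response policy zone can be sketched as a lookup that rewrites the answer for listed names. This is a simplified model, not Infoblox's implementation or the RPZ zone-file format: real RPZ policies are themselves DNS zones and support more triggers and actions. The blocked names use the reserved `.example` TLD and are invented.

```python
# Hypothetical policy: one exact name, plus a wildcard covering every subdomain of another.
POLICY = {"malware-c2.example", "*.bad-domain.example"}


def rpz_action(qname: str) -> str:
    """Return 'NXDOMAIN' for names covered by the policy, otherwise 'PASSTHRU'."""
    name = qname.rstrip(".").lower()
    if name in POLICY:
        return "NXDOMAIN"
    labels = name.split(".")
    # Check each parent domain for a wildcard entry such as *.bad-domain.example
    for i in range(1, len(labels)):
        if ("*." + ".".join(labels[i:])) in POLICY:
            return "NXDOMAIN"
    return "PASSTHRU"


print(rpz_action("x.bad-domain.example"))
print(rpz_action("www.example.com"))
```

As in RPZ, the wildcard entry covers subdomains but not `bad-domain.example` itself, which would need its own entry.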

2.3. DNS Software Threats

When DNS is compromised, many things can happen. Attackers typically exploit compromised DNS servers in one of two ways. First, an attacker can redirect all incoming traffic to a server of their choosing. This enables the attacker to launch further attacks or to collect traffic logs that contain sensitive information. Second, an attacker can capture all inbound e-mail. This also allows the attacker to send e-mail using the victim organization's domain and exploit its reputation to steal from or damage the company. To make things worse, an attacker can choose a third way, which does both. One such attack is cache poisoning, also known as DNS spoofing: an attack in which forged data is inserted into a DNS resolver's cache. A single successful attack on a DNS server affects all the users linked to that server. To poison the cache, the attacker must also guess the ID number of the resolver's outstanding query so that the forged reply is accepted.

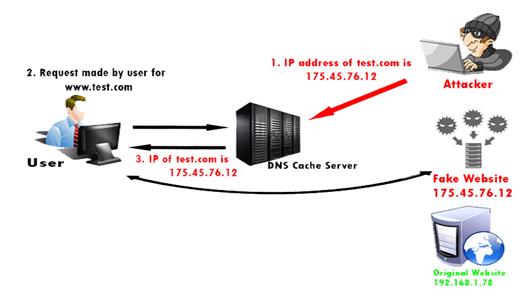

Figure 4: How the cyber-attack works.
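Why guessing the ID matters can be shown with a short calculation. The DNS transaction ID is a 16-bit field, so a blind attacker who sends forged replies before the genuine one arrives succeeds with a probability of roughly the number of distinct guesses divided by 65,536. The sketch assumes a single race window, uniformly random IDs and one distinct guess per forged reply; the function name and the optional `port_space` parameter (for a resolver that also randomizes its source port) are illustrative assumptions.

```python
ID_SPACE = 2 ** 16  # the DNS transaction ID is a 16-bit field


def spoof_success_probability(forged_replies: int, port_space: int = 1) -> float:
    """Chance that one of the attacker's distinct guesses matches the outstanding query.

    Assumes the ID (and, optionally, the source port) is chosen uniformly at random
    and that every forged reply carries a different guess.
    """
    combinations = ID_SPACE * port_space
    return min(forged_replies, combinations) / combinations


print(spoof_success_probability(1000))                     # ID only
print(spoof_success_probability(1000, port_space=2 ** 16))  # ID plus random source port
```

Making the attacker guess more unpredictable values is what turns a feasible race into an impractical one.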

3. Apache Server

Apache is the most widely used web server software. Developed and maintained by the Apache Software Foundation, Apache is open-source software available for free. It runs on 67% of all web servers in the world. It is fast, reliable and secure. It can be highly customized to meet the needs of many different environments by using extensions and modules. Most WordPress hosting providers use Apache as their web server software, although WordPress can run on other web server software as well. The Apache HTTP Server Project is an effort to develop and maintain an open-source HTTP server for modern operating systems, including UNIX and Windows. The goal of this project is to provide a secure, efficient and extensible server that provides HTTP services in sync with the current HTTP standards. The Apache HTTP Server ("httpd") was launched in 1995, and it has been the most popular web server on the Internet since April 1996.

Apache Web Server is designed to create web servers that can host one or more HTTP-based websites. Notable features include support for multiple programming languages, server-side scripting, authentication mechanisms and database support. Apache Web Server can be enhanced by modifying the code base or adding extensions and add-ons. It is also widely used by web hosting companies to provide shared and virtual hosting because, by default, Apache Web Server supports and distinguishes between different hosts that reside on the same machine.
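Distinguishing between hosts on the same machine (name-based virtual hosting) comes down to choosing a site from the `Host` header of each HTTP request. The sketch below models that choice; the site names and directories are invented, and falling back to the first listed site loosely mirrors Apache's use of the first matching virtual host as the default. It is not Apache's actual code.

```python
# Hypothetical sites served from one machine, keyed by host name (first entry = default).
VIRTUAL_HOSTS = {
    "www.example.com": "/var/www/example",
    "shop.example.net": "/var/www/shop",
}


def document_root_for(host_header: str) -> str:
    """Pick a site's document root from the HTTP Host header."""
    host = host_header.split(":", 1)[0].lower()  # drop an optional ":port" (IPv6 literals not handled)
    default_root = next(iter(VIRTUAL_HOSTS.values()))  # first listed site acts as the default
    return VIRTUAL_HOSTS.get(host, default_root)


print(document_root_for("shop.example.net:8080"))
print(document_root_for("unknown.example.org"))
```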

3.1. Apache Configuration

Apache HTTP Server is configured by placing directives in plain-text configuration files. The main configuration file is usually called httpd.conf. The location of this file is set at compile time, but may be overridden with the -f command-line flag. In addition, other configuration files may be added using the Include directive, and wildcards can be used to include many configuration files. Any directive may be placed in any of these configuration files. Changes to the main configuration files are only recognized by httpd when it is started or restarted. The server also reads a file containing MIME document types; the filename is set by the TypesConfig directive and is mime.types by default.

The Apache HTTP web server is in many respects the de facto standard for general-purpose HTTP services. Through its large number of modules, it provides flexible support for proxy servers, URL rewriting and access control. In addition, web developers often choose Apache for its support of server-side scripting and embedded interpreters. These capabilities facilitate the quick and efficient execution of dynamic code. However, although there are several well-known alternatives to Apache, even within the open-source world, the extent of Apache's adoption is unique.

The exceptional degree of flexibility provided by Apache does not come without some cost. This mostly takes the form of a configuration syntax that is sometimes confusing and often complicated. This document and several other guides seek to address this complexity and explore some of the more advanced and optional functionality of the Apache HTTP Server.

3.2. Apache Directives and Modules

From the point of view of a system administrator, there are several kinds of directive. These can be broadly classified according to their scope and validity in the configuration files. Some directives are valid only for the server as a whole, while others apply within a section such as VirtualHost or Directory. Conflicting directives may override each other based on specificity: for example, a directive inside a Directory section overrides one outside it. In most cases this applies recursively, although this is controlled by individual modules, whose behavior may differ.
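The "more specific section wins" rule can be modelled by applying matching Directory sections from the shortest path to the longest, so that deeper directories overwrite values set higher up. This is a deliberately simplified model with invented paths and settings; in real Apache, merging also depends on section type (Directory, Files and Location sections are merged in a defined order), on .htaccess files, and on each module's own merge logic.

```python
from pathlib import PurePosixPath

# Hypothetical settings: server-wide defaults plus per-directory overrides.
SERVER_DEFAULTS = {"Options": "FollowSymLinks", "AllowOverride": "None"}
DIRECTORY_SECTIONS = {
    "/var/www": {"Options": "Indexes FollowSymLinks"},
    "/var/www/html/private": {"Options": "None", "AllowOverride": "AuthConfig"},
}


def effective_settings(path: str) -> dict[str, str]:
    """Merge the matching directory sections, less specific (shorter) paths first."""
    target = PurePosixPath(path)
    settings = dict(SERVER_DEFAULTS)
    for directory in sorted(DIRECTORY_SECTIONS, key=lambda d: len(PurePosixPath(d).parts)):
        section = PurePosixPath(directory)
        if target == section or section in target.parents:
            settings.update(DIRECTORY_SECTIONS[directory])  # deeper sections overwrite earlier values
    return settings


print(effective_settings("/var/www/html/index.html"))
print(effective_settings("/var/www/html/private/report.html"))
```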

The standard contexts supported by Apache are:

Main config: directives that appear in httpd.conf but not inside any container apply globally, except where overridden. This is suitable for setting system defaults and for once-only setup such as loading modules. Most directives can be used here.

Virtual host: each virtual host has its own server-wide configuration, set within a VirtualHost container. Directives that are valid in the main config are also valid in a virtual host.

Directory: the Directory, Files and Location directives define a hierarchy within which configuration can be set and overridden at any level. This is the most common form of configuration, and it is also valid within virtual hosts. For convenience, this essay refers to these collectively as the Directory Hierarchy.

.htaccess: an extension of the Directory Hierarchy that allows users to set directives for their own directories.

Alias is one of the must-use directives, as it allows you to point your web server to directories outside your document root. Once set up correctly, any URL whose path begins with the alias will automatically resolve to the filesystem path set in the alias. So you could take a folder, say /Nasser/sites/docs, which would not normally be accessible by Apache, and serve it under a URL path such as /docs.
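The prefix rule behind Alias can be sketched as a URL-path to filesystem-path translation. The mapping of the assumed URL path /docs to /Nasser/sites/docs is an example, the function name is hypothetical, and this models the idea only, not mod_alias's implementation; the extra check shows why a translated path should never be allowed to escape the aliased directory.

```python
import posixpath
from typing import Optional

# Hypothetical mapping, similar in spirit to:  Alias "/docs" "/Nasser/sites/docs"
ALIASES = {"/docs": "/Nasser/sites/docs"}


def map_url_path(url_path: str) -> Optional[str]:
    """Translate a URL path to a filesystem path using Alias-style prefix rules."""
    for prefix, target in ALIASES.items():
        if url_path == prefix or url_path.startswith(prefix + "/"):
            candidate = posixpath.normpath(target + url_path[len(prefix):])
            # Refuse anything that escapes the aliased directory, e.g. /docs/../../etc
            if candidate == target or candidate.startswith(target + "/"):
                return candidate
            return None
    return None  # not aliased: the request would be served from the document root instead


print(map_url_path("/docs/guide/intro.html"))
print(map_url_path("/docs/../../etc/passwd"))
```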

4. Security

Several high-profile hacking attacks have shown that web security remains the most critical issue for any business that conducts its operations online. Web servers are one of the most targeted public faces of an organization because of the sensitive data they usually host. Securing a web server is as important as securing the website or web application itself and the network around it. If you have a secure web application and an insecure web server, your business is still at high risk: your company's security is only as strong as its weakest point. Although securing a web server can be a daunting operation that requires specialist expertise, it is not an impossible task. Long hours of research, and a lot of coffee and food, can save you from long days at the office, headaches and data breaches in the future.

By default, operating system installations and configurations are not secure. In a typical default installation, many network services that will not be used in a web server configuration are installed, such as remote registry services and the print server service. The more services run on an operating system, the more ports are left open, leaving more open doors for malicious users to abuse. Switch off all unnecessary services and disable them, so that they do not start automatically the next time the server is rebooted. Switching off unnecessary services also gives the server's performance an extra boost by freeing some hardware resources. Although it is often not practical nowadays, server administrators should log in to web servers locally whenever possible. If remote access is necessary, make sure the remote connection is properly secured by using tunneling and encryption protocols. Using security tokens and other single sign-on equipment and software is a very good security practice. Remote access should also be restricted to a specific set of IP addresses and to particular accounts only. It is also very important not to use public computers or public networks, such as Internet cafés or public wireless networks, to access corporate servers remotely.
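The last rules (trusted addresses and named accounts only) amount to a simple double check, sketched below. The network range and account name are invented, and in practice this policy is normally enforced by the firewall and by the remote-access service's own settings rather than by custom code.

```python
import ipaddress

# Hypothetical allow-lists for remote administration.
ADMIN_NETWORKS = [ipaddress.ip_network("203.0.113.0/28")]  # e.g. the office VPN exit range
ADMIN_ACCOUNTS = {"webadmin"}


def remote_login_allowed(client_ip: str, account: str) -> bool:
    """Permit remote administration only from listed networks AND for listed accounts."""
    addr = ipaddress.ip_address(client_ip)
    from_trusted_network = any(addr in net for net in ADMIN_NETWORKS)
    return from_trusted_network and account in ADMIN_ACCOUNTS


print(remote_login_allowed("203.0.113.5", "webadmin"))
print(remote_login_allowed("203.0.113.5", "root"))
```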

Separate servers should be used for internal and external-facing applications, and servers for external-facing applications should be hosted on a DMZ or containerized service network to prevent an attacker from exploiting a vulnerability to gain access to sensitive internal information. Penetration tests should be run regularly to identify potential attack vectors, which are sometimes caused by out-of-date server modules, configuration or coding errors, and poor patch management. Website security logs should be audited continuously and stored in a secure location. Other best practices include using a separate development server for testing and debugging, limiting the number of superuser and administrator accounts, and deploying an intrusion detection system (IDS) that includes monitoring and analysis of user and system activities, recognition of patterns typical of attacks, and analysis of normal activity patterns.
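One of the "patterns typical of attacks" that a log audit or IDS looks for is many failed logins from one address. The sketch below counts them in a hypothetical log format; the format, the threshold and the addresses are assumptions, and a real IDS would also consider time windows and many other signatures.

```python
import re
from collections import Counter

# Hypothetical log format: "<timestamp> FAILED LOGIN user=<name> ip=<address>"
FAILED_RE = re.compile(r"FAILED LOGIN user=(\S+) ip=(\S+)")


def suspicious_ips(log_lines: list[str], threshold: int = 5) -> set[str]:
    """Flag source addresses with at least `threshold` failed logins (a brute-force pattern)."""
    failures = Counter()
    for line in log_lines:
        match = FAILED_RE.search(line)
        if match:
            failures[match.group(2)] += 1
    return {ip for ip, count in failures.items() if count >= threshold}


sample_log = [f"2019-12-16T10:00:0{i} FAILED LOGIN user=admin ip=198.51.100.9" for i in range(6)]
sample_log.append("2019-12-16T10:01:00 FAILED LOGIN user=alice ip=192.0.2.4")
sample_log.append("2019-12-16T10:02:00 LOGIN OK user=alice ip=192.0.2.4")
print(suspicious_ips(sample_log))
```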

4.1. SSL

SSL (Secure Sockets Layer) is the standard security technology for establishing an encrypted link between a web server and a browser. This link ensures that all data passed between the web server and browsers remains private and intact. SSL is an industry standard and is used by millions of websites to protect their online transactions with their customers. To be able to create an SSL connection, a web server requires an SSL certificate. When you choose to activate SSL on your web server, you will be prompted to answer several questions about the identity of your website and your company. Your web server then creates two cryptographic keys, a private key and a public key. The public key does not need to be secret and is placed into a Certificate Signing Request (CSR), a data file that also contains your details. You should then submit the CSR. During the SSL certificate application process, the Certification Authority will validate your details and issue an SSL certificate containing them, allowing you to use SSL. Your web server will match the issued SSL certificate to your private key and will then be able to establish an encrypted link between the website and the customer's web browser. The complexities of the SSL protocol remain invisible to your customers. Instead, their browsers provide a key indicator to let them know they are currently protected by an SSL-encrypted session; clicking on the lock icon displays the SSL certificate and its details. All SSL certificates are issued to either companies or legally accountable individuals.

Typically, an SSL certificate will contain your domain name, company name, address, city, state and country. It will also contain the expiration date of the certificate and details of the Certification Authority responsible for issuing it. When a browser connects to a secure site, it will retrieve the site's SSL certificate and check that it has not expired, that it has been issued by a Certification Authority the browser trusts, and that it is being used by the website for which it was issued. If it fails any one of these checks, the browser will display a warning to let the end user know that the site is not secured by SSL.
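The three browser checks can be expressed as a short function over certificate data in the dictionary shape returned by Python's `ssl.SSLSocket.getpeercert()`. This is an illustrative sketch: the trusted-issuer set, the sample certificate and the function names are invented, and comparing the issuer's name is only a stand-in for the cryptographic chain validation that real browsers (and Python's `ssl.create_default_context()`) perform.

```python
import ssl
import time
from typing import Optional

# Hypothetical names of certification authorities this "browser" trusts.
TRUSTED_ISSUERS = {"Example Root CA"}


def common_name(rdns) -> str:
    """Pull the commonName out of getpeercert()-style name data."""
    for rdn in rdns:
        for key, value in rdn:
            if key == "commonName":
                return value
    return ""


def hostname_matches(pattern: str, host: str) -> bool:
    """Exact match, or one wildcard in the left-most label (e.g. *.example.com)."""
    pattern, host = pattern.lower(), host.lower()
    if pattern.startswith("*."):
        return host.count(".") == pattern.count(".") and host.endswith(pattern[1:])
    return pattern == host


def certificate_problems(cert: dict, host: str, now: Optional[float] = None) -> list[str]:
    """Apply the three checks; an empty list means the certificate passed."""
    now = time.time() if now is None else now
    problems = []
    if ssl.cert_time_to_seconds(cert["notAfter"]) < now:
        problems.append("expired")
    if common_name(cert["issuer"]) not in TRUSTED_ISSUERS:
        problems.append("untrusted issuer")  # stand-in for real signature-chain validation
    names = [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]
    if not any(hostname_matches(name, host) for name in names):
        problems.append("name mismatch")
    return problems


example_cert = {
    "issuer": ((("commonName", "Example Root CA"),),),
    "notAfter": "Jan  5 09:34:43 2018 GMT",
    "subjectAltName": (("DNS", "www.example.com"), ("DNS", "*.example.com")),
}
checked_at = ssl.cert_time_to_seconds("Jan  1 00:00:00 2018 GMT")
print(certificate_problems(example_cert, "www.example.com", now=checked_at))
print(certificate_problems(example_cert, "example.org", now=checked_at))
```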

4.2. HTTPS

Hypertext Transfer Protocol Secure (HTTPS) is the secure version of HTTP, the protocol over which data is sent between your browser and the website you are connected to. The "S" at the end of HTTPS stands for Secure: it means all communications between your browser and the website are encrypted. HTTPS is often used to protect highly confidential online transactions such as online banking and online shopping order forms. HTTPS pages typically use one of two secure protocols to encrypt communications: SSL (Secure Sockets Layer) or TLS (Transport Layer Security). Both the TLS and SSL protocols use what is known as an asymmetric Public Key Infrastructure (PKI) system. An asymmetric system uses two keys to encrypt communications, a public key and a private key. Anything encrypted with the public key can only be decrypted by the private key, and vice versa. As the names suggest, the private key should be kept strictly protected and should only be accessible to its owner. In the case of a website, the private key remains securely stored on the web server. Conversely, the public key is intended to be distributed to anyone and everyone who needs to be able to decrypt information that was encrypted with the private key.
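The "encrypt with one key, decrypt with the other" property can be seen in textbook RSA with deliberately tiny numbers (the classic p = 61, q = 53 example). This is a toy for illustration only: real TLS uses much larger keys and padding schemes, and in modern versions uses the key pair mainly for authentication and key exchange rather than for encrypting page data directly. The helper name `apply_key` is hypothetical.

```python
# Textbook RSA with tiny primes: for illustration only, never for real security.
p, q = 61, 53
n = p * q                # modulus, shared by both keys
phi = (p - 1) * (q - 1)  # Euler's totient of n
e = 17                   # public exponent
d = pow(e, -1, phi)      # private exponent: modular inverse of e (Python 3.8+)

public_key, private_key = (e, n), (d, n)


def apply_key(key: tuple[int, int], message: int) -> int:
    """Raise the message to the key's exponent modulo n (RSA encryption or decryption)."""
    exponent, modulus = key
    return pow(message, exponent, modulus)


m = 65
c = apply_key(public_key, m)   # anyone can encrypt with the public key...
print(apply_key(private_key, c) == m)  # ...but only the private key recovers m
s = apply_key(private_key, m)  # data transformed with the private key...
print(apply_key(public_key, s) == m)   # ...can be reversed by anyone with the public key
```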

When you request an HTTPS connection to a web page, the website initially sends its SSL certificate to your browser. This certificate contains the public key needed to begin the secure session. Based on this initial exchange, your browser and the website then initiate the SSL handshake. The SSL handshake involves the generation of shared secrets to establish a uniquely secure connection between you and the website. When a trusted SSL digital certificate is used during an HTTPS connection, users will see a padlock icon in the browser address bar. When an Extended Validation certificate is installed on a website, the address bar will turn green.
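How two parties can derive a shared secret over a public channel can be illustrated with a toy Diffie-Hellman exchange, one family of key-agreement methods that TLS can use during the handshake. The parameters are tiny and purely illustrative; real handshakes use large groups or elliptic curves and also authenticate the exchange with the server's certificate. The function and variable names are hypothetical.

```python
import secrets

# Toy public parameters (far too small for real use; shown only to illustrate the maths).
P = 23  # prime modulus
G = 5   # generator


def make_keypair() -> tuple[int, int]:
    """Return (private value, public value) for one side of the exchange."""
    private = secrets.randbelow(P - 2) + 1  # random integer in 1 .. P-2
    return private, pow(G, private, P)


browser_private, browser_public = make_keypair()
server_private, server_public = make_keypair()

# Each side combines its own private value with the other side's public value.
browser_secret = pow(server_public, browser_private, P)
server_secret = pow(browser_public, server_private, P)
print(browser_secret == server_secret)  # True: both sides derived the same shared secret
```

Only the public values travel over the network; an eavesdropper who sees them still has to solve a discrete-logarithm problem, which is infeasible at real key sizes.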

All communication sent over ordinary HTTP connections is in plain text and can be read by any attacker who manages to intercept the connection between the browser and the website. This is a clear danger if the communication is an order form containing credit card details or a social security number. With HTTPS, all communication is encrypted, so even if someone managed to intercept it, they would not be able to decrypt any of the data passing between the user and the website.
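On the client side, this encryption is what a TLS library adds on top of a plain socket. The following Python sketch uses only the standard `ssl` and `socket` modules; `https_get` is a hypothetical helper name, and the TLS 1.2 minimum is a policy choice for the example rather than something stated in the text. Because the default context verifies the certificate and hostname, a connection to a site with an invalid certificate fails with `ssl.SSLCertVerificationError` instead of silently falling back to plain text.

```python
import socket
import ssl

def make_client_context() -> ssl.SSLContext:
    # create_default_context() loads the system's trusted CA certificates and
    # enables certificate verification and hostname checking.
    ctx = ssl.create_default_context()
    # Refuse obsolete SSL and early TLS versions.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx

def https_get(host: str, path: str = "/", port: int = 443) -> bytes:
    """Send a minimal HTTP/1.1 GET over TLS and return the raw response."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=10) as raw:
        # Everything written to `tls` is encrypted before it reaches the network.
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            request = (f"GET {path} HTTP/1.1\r\nHost: {host}\r\n"
                       "Connection: close\r\n\r\n")
            tls.sendall(request.encode("ascii"))
            chunks = []
            while chunk := tls.recv(4096):
                chunks.append(chunk)
    return b"".join(chunks)
```

Calling `https_get("example.com")` (network access required) returns the response headers and body; anyone capturing the packets in between sees only ciphertext.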

The main advantages of an HTTPS certificate are that customers' information, such as credit card numbers, is encrypted and cannot be read if intercepted; that visitors can verify, depending on the type of certificate, that you are a registered business and that you own the domain; and that customers are more likely to trust, and complete purchases from, sites that use HTTPS.

4.3. SSH Keys

SSH keys are a pair of cryptographic keys that can be used to authenticate to an SSH server as an alternative to password-based logins. The private and public key pair is created before authentication. The private key is kept secret and secure by the user, while the public key can be shared with anyone. To configure SSH key authentication, you place the user's public key on the server in a special location (on OpenSSH servers, typically the ~/.ssh/authorized_keys file in the user's home directory). When the user connects, the server asks for proof that the client holds the associated private key. The SSH client uses the private key to respond in a way that demonstrates ownership of it, and the server then allows the client to connect without a password. With SSH, every type of authentication, including password authentication, is fully encrypted. However, when password-based logins are allowed, malicious users can repeatedly attempt to access the server. With modern computing power, it is possible to gain entry to a server by automating these attempts and trying combination after combination until the right password is found. Setting up SSH key authentication lets you disable password-based authentication. SSH keys generally contain many more bits of data than a password, meaning that there are vastly more possible combinations that an attacker would have to run through.
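A rough way to see why keys outlast passwords is to compare the size of the search space in bits. The sketch below assumes a best-case password of 8 characters chosen uniformly at random from the 94 printable ASCII characters, and uses the commonly quoted figure of about 128 bits of security for an Ed25519 SSH key; real, human-chosen passwords are usually far weaker than this assumption.

```python
import math

def search_space_bits(alphabet_size: int, length: int) -> float:
    """log2 of the number of possible strings: bits an attacker must cover."""
    return length * math.log2(alphabet_size)

# Assumption: 8 characters drawn uniformly from the 94 printable ASCII symbols.
password_bits = search_space_bits(94, 8)

# Assumption: the commonly quoted ~128-bit security level of an Ed25519 key.
ed25519_bits = 128

print(f"password search space: about 2^{password_bits:.1f}")              # about 2^52.4
print(f"key is about 2^{ed25519_bits - password_bits:.0f} times harder")  # about 2^76 times harder
```

Each extra bit doubles the work, so a gap of roughly 76 bits is a factor of about 7.6 × 10^22.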

Many SSH key algorithms are considered uncrackable with modern computing hardware simply because trying all possible keys would take far too long. SSH keys are easy to set up and are the recommended way to log into any Linux or Unix server environment remotely. A pair of SSH keys can be generated on your machine and the public key transferred to your servers within a matter of minutes. The SSH protocol supports many authentication methods; one of the most important is public key authentication, used for both interactive and automated connections. In addition to security, public key authentication offers usability advantages: it allows users to achieve single sign-on across the SSH servers they connect to. Public key authentication also allows automated, passwordless logins, a key enabler for the countless secure automation processes that run within enterprise networks worldwide. Public key cryptography is built around this concept of a key pair: a public key that can be shared freely and a private key that must be kept secret.
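The proof-of-possession step of public key authentication can be sketched as a challenge and response, again with a toy textbook RSA key (tiny primes, insecure, illustration only). In the real SSH protocol (RFC 4252) the client signs data that includes the session identifier rather than a separate random challenge, and real keys are far larger; the function names here are hypothetical.

```python
import hashlib
import secrets

# Toy key pair (textbook RSA, p=61, q=53): illustration only, NOT secure.
N, E = 3233, 17   # public key: stored on the server
D = 2753          # private key: stays on the client

def digest(challenge: bytes) -> int:
    # Hash the challenge and reduce it into the toy modulus range.
    return int.from_bytes(hashlib.sha256(challenge).digest(), "big") % N

def client_sign(challenge: bytes) -> int:
    # Client side: only the holder of the private exponent D can compute this.
    return pow(digest(challenge), D, N)

def server_verify(challenge: bytes, signature: int) -> bool:
    # Server side: needs only the public key (E, N).
    return pow(signature, E, N) == digest(challenge)

challenge = secrets.token_bytes(32)   # server picks a fresh random challenge
sig = client_sign(challenge)          # client answers without revealing D
print(server_verify(challenge, sig))  # True
```

The private key never leaves the client; the server learns only that the client could produce a signature that the stored public key accepts.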

4.4. Firewalls

A firewall is a piece of software or hardware that controls which services are exposed to the network. This means blocking or restricting access to every port except those that should be publicly available. On a typical server, a number of services may be running by default. These can be grouped into the following categories. Public services can be accessed by anyone on the internet, often anonymously; a typical example is a web server that allows access to a site. Private services should only be accessed by a select group of authorized accounts or from certain locations; an example is a database control panel. Internal services should be accessible only from within the server itself, without exposing the service to the outside world; an example is a database that only accepts local connections. Firewalls can ensure that access to software is limited according to these categories. Public services can be left open and available to clients, private services can be restricted based on different criteria, and internal services can be made completely inaccessible to the outside world. In most configurations, access to ports that are not in use is blocked entirely.
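The three categories of service can be expressed as a small default-deny rule table. The sketch below is not a real firewall (on Linux that work is done by tools such as nftables, iptables or ufw); the ports and the office address range `198.51.100.0/24` are hypothetical, chosen to mirror the examples in the text.

```python
import ipaddress

# Hypothetical rule table: (destination port, allowed source network or None = anyone).
RULES = [
    (80, None),                                        # public: web server (HTTP)
    (443, None),                                       # public: web server (HTTPS)
    (8080, ipaddress.ip_network("198.51.100.0/24")),   # private: control panel, office range only
    (3306, ipaddress.ip_network("127.0.0.1/32")),      # internal: database, local connections only
]

def is_allowed(src_ip: str, dst_port: int) -> bool:
    """Return True if a connection from src_ip to dst_port matches a rule."""
    src = ipaddress.ip_address(src_ip)
    for port, allowed in RULES:
        if port == dst_port and (allowed is None or src in allowed):
            return True
    return False  # default deny: anything not explicitly allowed is blocked

print(is_allowed("203.0.113.9", 443))   # True  (public service)
print(is_allowed("203.0.113.9", 8080))  # False (outsider, private service)
print(is_allowed("127.0.0.1", 3306))    # True  (local access to internal service)
```

The final `return False` is the important design choice: unused ports need no rule at all, because anything unmatched is refused.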

Firewalls are an essential part of any server configuration. Even if the services themselves implement security features or are restricted to the interfaces they should run on, a firewall provides an additional layer of protection. A properly configured firewall will restrict access to everything except the services that need to stay open. Exposing only a few pieces of software reduces the attack surface of the server, limiting the components that are vulnerable to exploitation. Several firewalls are available for Linux systems, some with a steeper learning curve than others. In general, though, setting up a firewall should only take a few minutes and only needs to happen during the server's initial setup or when you change which services are offered on the machine.

As attacks against web servers became more common, it became apparent that firewalls were needed to protect networks from attacks at the application layer. Packet-filtering and stateful-inspection firewalls cannot distinguish valid application-layer protocol requests and data from malicious traffic encapsulated within apparently valid protocol traffic. Firewalls that provide application-layer filtering can examine the payload of a packet and distinguish valid requests and data from malicious code disguised as a valid request or data. Because this type of firewall makes decisions based on content, it gives security engineers finer control over network traffic and lets them set rules to allow or deny specific application requests or commands. For example, it can allow or deny a particular incoming Telnet command from a specific user, whereas other firewalls can only allow or deny all incoming requests from a specific host.
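To illustrate the difference, the sketch below looks inside the payload of an HTTP request, something a port-based packet filter never does. It uses HTTP rather than the Telnet example from the text, and the allowed methods and the path-traversal rule are hypothetical policy choices; a real application-layer firewall would also have to handle URL encoding (for example `%2e%2e`), header parsing and much more.

```python
# Hypothetical policy: the only HTTP methods this application accepts.
ALLOWED_METHODS = {"GET", "HEAD", "POST"}

def inspect_http_request(raw: bytes) -> tuple[bool, str]:
    """Inspect the request line of an HTTP payload; return (allowed, reason)."""
    try:
        request_line = raw.split(b"\r\n", 1)[0].decode("ascii")
    except UnicodeDecodeError:
        return False, "non-ASCII request line"
    parts = request_line.split(" ")
    if len(parts) != 3 or not parts[2].startswith("HTTP/"):
        return False, "malformed request line"
    method, target, _version = parts
    if method not in ALLOWED_METHODS:
        return False, f"method {method} not permitted"
    # Look for a ".." segment in the path (query string excluded).
    path = target.split("?", 1)[0]
    if ".." in path.split("/"):
        return False, "path traversal attempt"
    return True, "ok"

print(inspect_http_request(b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n"))
print(inspect_http_request(b"GET /../etc/passwd HTTP/1.1\r\n\r\n"))
```

A packet filter would see both requests simply as traffic to port 80 and would have to allow or block them together; inspecting the payload lets the second one be rejected on its own.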

4.5. VPNs and Private Networking

Private networks are networks that are accessible only to authorized servers or users. In some regions, private networking is available as a data-center-wide network. A VPN, or virtual private network, is a way to create secure connections between remote computers and present the connection as if it were a local private network. This provides a way to configure services as if they were on a private network and to link remote servers over secure connections. Given the choice between the two, private networking is almost always preferable to public networking for internal communication. However, since other clients within the data center can access the same network, you must still take extra measures to secure communication between your servers. Using a VPN is, effectively, a way to create a private network that only your servers can see, so communication remains completely private and secure. Other applications can be configured to route their traffic over the virtual interface that the VPN software exposes. This way, only services that are meant to be used by clients on the public internet need to be exposed on the public network.
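Configuring an application to use the private network often comes down to choosing which interface address it listens on. The sketch below checks addresses against the RFC 1918 private IPv4 ranges and refuses to bind an internal service to a non-private address; the function names and the example addresses (such as a hypothetical VPN address `10.8.0.1`) are assumptions for illustration.

```python
import ipaddress

# RFC 1918 private IPv4 ranges, listed explicitly.
PRIVATE_RANGES = [ipaddress.ip_network(net) for net in
                  ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

def is_private(addr: str) -> bool:
    """True if addr falls inside one of the RFC 1918 ranges."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in PRIVATE_RANGES)

def choose_bind_address(public_facing: bool, public_ip: str, private_ip: str) -> str:
    """Pick the interface address a service should listen on."""
    if public_facing:
        return public_ip          # e.g. the web server
    if not is_private(private_ip):
        raise ValueError(f"{private_ip} is not on a private network")
    return private_ip             # e.g. a database reachable only over the VPN

print(choose_bind_address(False, "203.0.113.10", "10.8.0.1"))  # 10.8.0.1
```

The returned address would then be used in the service's listen or bind setting instead of `0.0.0.0`, which listens on every interface, including the public one.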

Using private networks in a data center that offers them is as simple as enabling the private interface when the server is created and configuring the applications and firewall to use the private network. Keep in mind that data-center-wide private networks share space with other servers that use the same network. With a VPN, the initial setup is a bit more involved, but the increased security is worth it for most use cases. Every server on a VPN must have the shared security and configuration data needed to establish the secure connection installed and configured. Once the VPN is up and running, applications should be configured to use the VPN tunnel. A VPN is a technology that creates a secure, encrypted connection over a less secure network, such as the internet. VPN technology was developed as a way to allow remote users and branch offices to securely access corporate applications and other resources. Data travels through a secure tunnel, and VPN users should authenticate with methods such as passwords, tokens or other unique identification methods to gain access to the VPN. VPN performance can be affected by a variety of factors, including the speed of users' internet connections, the types of protocols an internet service provider uses and the type of encryption the VPN uses. Performance can also be affected by poor quality of service and conditions that are outside the control of IT.

Table of Figures

Figure 1: The difference between URL, URI and URN.

Figure 2: HTML code and how the HTML language works.
