Stealth Assessment in Educational Games: An In-depth Literature Review

Published: 9th Dec 2019

Abstract

Teaching and learning through games has been a subject of interest to educators for a long time. With the relatively recent explosion of the popularity of video games, many have sought to capitalize on student interest in games and encourage players to focus their time and attention on games that will teach them about a subject. Despite the massive market that this effort has spawned, games for learning still occupy a very small portion of the gaming market and traditional teaching methods are still preferred by many instructors to game based methods. This is likely because many developers of learning games do not take the time or effort to understand the motivational factors behind entertainment games or the motivational factors of learning, leading to games that players abandon quickly. This problem is compounded by a lack of assessment methods for such games. This paper conducts an expansive literature review of game based learning and stealth assessment. This paper also examines critical areas that current literature does not cover sufficiently and discusses how further work could solve these issues.

Definition of Terms

Epistemic Games are a type of serious game that is based on experiential learning theory (Rupp, Gushta, Mislevy, & Shaffer, 2010).

Game Based Learning is a kind of game play in which the goals of the game match specific learning outcomes (Van Eck, 2006).

Game Mechanic is a gameplay element that a player uses as a means of interacting with the game world and achieving the goals of the game (Baron & Amresh, 2015).

Serious games are video games that are intended to inform, teach and train players first and foremost rather than entertain like regular video games (Ritterfeld, Cody & Vorderer, 2009).

Stealth assessment is a kind of learner assessment woven deeply and invisibly into the fabric of the game (Shute, 2013).

Work product usually consists of a transcript of player activities and the system state at the end of a task (Kim, Almond, & Shute, 2016).

Introduction

Video games as a form of entertainment have exploded in popularity, with some players logging copious amounts of play time on a weekly basis (Williams, Yee & Caplan, 2008). Many parents view their child’s obsession with video games as a barrier to their education (Bloom, 2009). However, there are many in the education field who are looking to harness the popularity of video games for learning purposes. Video games make use of a variety of techniques both to engage the player and to teach them how to play. Many of these techniques are applicable to actual learning and training. Educators are often searching for new methods to deliver content to students, and this becomes more important as general pedagogical techniques shift to account for the new media-driven way in which many people learn in today’s world (Eitel & Steiner, 1999). This has led to a massive market for educational games. The video game industry itself was considered to be worth twenty billion dollars in 2014 (Entertainment Software Association, 2015), and the educational games market alone was worth twenty million dollars in 2007 (Susi, Johannesson & Backlund, 2007). This makes educational games an attractive field for businesses wanting to tap into the market, and the flood of learning games is attractive to educators looking for innovative ways to improve learning. Serious games also provide new data mining and collection opportunities not normally possible or easy with laboratory studies or other forms of media (DiCerbo, 2014).

Even though serious games and regular video games have a different central focus, they are similar enough that most principles that apply to the development of regular entertainment games also apply to serious games. For this reason, a large number of the developers of commercially available serious games follow well-established design paradigms for entertainment games without paying careful attention to the key differences one must account for when designing a serious game. Partly because of these issues, serious games have yet to take a central role in classrooms despite the massive market and interest in their use for teaching (Blanco et al., 2012). There are also issues with the development of entertainment games that carry over to serious games. While it is clear that players are willing to spend a great amount of time on games, usually with no tangible reward such as money (Williams et al., 2008), it is not clearly understood why players are motivated to spend so much time playing their games (Kong, Kwok & Fang, 2012). It is important, then, to consider motivation and the role it plays both in video games and in learning. By understanding this role, it may become easier to further motivate a player within learning games by incorporating motivation theory directly into design techniques. Proper design is also a subject of importance, as video games are a relatively new media form and there is great debate regarding the design and classification of games even within the entertainment sector (Apperley, 2006).

Despite the large and profitable market for serious games (Van Eck, 2006), there remains the troubling central question as to the effectiveness of such games in matching student outcomes to learning objectives (Bellotti, Kapralos, Lee, Moreno-Ger & Berta, 2013). While there have been several studies on the subject, most rely on qualitative data to draw their conclusions. There is currently no widely accepted methodology, system or framework for people to quantify and evaluate learning gain data with respect to serious games. This makes evaluating these games difficult and helps explain why educators have been slow to adopt them in the classroom.

Epistemic games

Shaffer’s (2006) epistemic games leverage professional practices to create contextualized play (an ‘epistemic frame’) that approximates the ways professionals learn through reflective practice (Schön, 1983). An epistemic frame describes how participants in a practice develop shared ways of viewing the world (Gauthier & Jenkinson, 2015). Understanding it helps a designer avoid confounding the mastery of isolated tasks with functional mastery of work in a domain (Shaffer, 2006). It is not simply “the content” of the domain that matters but how people think with that content, what they do, and the situations in which they do it. Epistemic games help players think and act like domain-specific experts (e.g., the player is a civil engineer who has to examine a fictional building with a specific set of defects), leading to growth in the player’s skills, knowledge and identities (V. J. Shute & Kim, 2011). They mimic the real world, allowing learners to have rich experiences of their domain-specific subjects by connecting knowledge-in-context to knowledge-in-action (Vahed & Singh, 2015). They also aid the emergence of disciplinary thinking and acting, which can be transferred to other contexts.

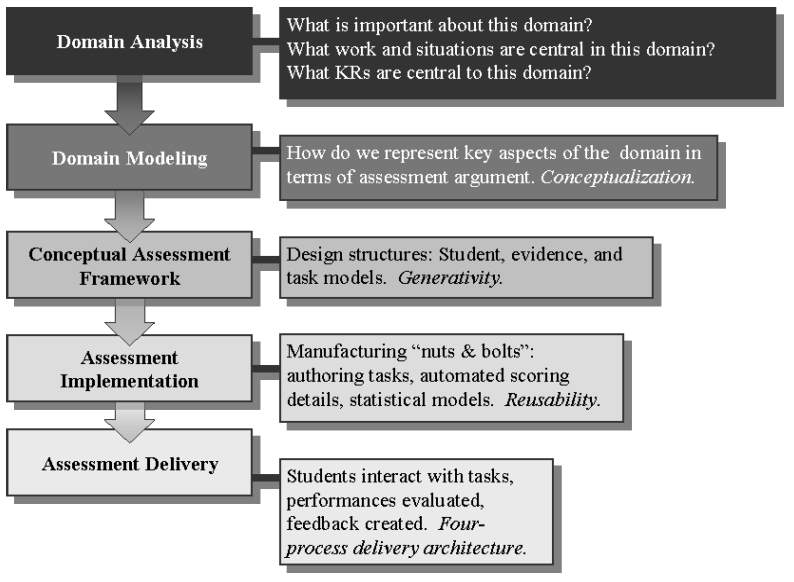

Evidence-centered Design

Evidence-centered design (ECD) was created by Mislevy et al. (2003) to support assessment developers in building any kind of assessment. It helps them explicate the rationales, choices, and consequences reflected in their assessment design (Rupp, Gushta, Mislevy, & Shaffer, 2010). ECD is a comprehensive framework for describing the conceptual, computational and inferential elements of educational assessment, and it is well suited to developing performance-based assessments, which are created in the absence of easily definable test specifications (Mislevy, Behrens, DiCerbo, & Levy, 2012). Figure 1 depicts the five ECD “layers” at which different types of thinking and activity occur in the development and operation of assessment systems. Each layer is composed of representations, components and processes that are appropriate for the activities that occur in that layer. Table 1 summarizes these layers in terms of their roles, key entities (e.g., concepts and building blocks), and the external knowledge representations that assist in achieving each layer’s purpose.

Domain Analysis Layer. This is the first layer of the ECD framework and lays the foundation for the later layers. It mobilizes representations, beliefs and modes of discourse for the target domain. How people think, what they do, and the situations in which they do it matter as much as “the content” of the domain. The knowledge, skills, and abilities that are to be inferred, the student behavior the inference is based on, and the situations that will elicit those behaviors are defined here (Mislevy et al., 2010). Identifying the external knowledge representations is the critical part of domain analysis. During domain analysis, assessment developers organize their insights about the domain in the form of assessment arguments.

Domain Modeling Layer. It is the second layer of the ECD framework. In this layer, the relationships among performances, proficiencies, features of task situation and features of work are mapped out. The conceptualizations in domain modeling that ground the design of operational assessments can be continually extended and refined as knowledge is acquired in prototypes, field trials, and analyses of operational data (Mislevy et al., 2012). Domain modeling structures the outcomes of domain analysis in a form that reflects the structure of an assessment argument, using the external knowledge representations (Mislevy et al., 2010).

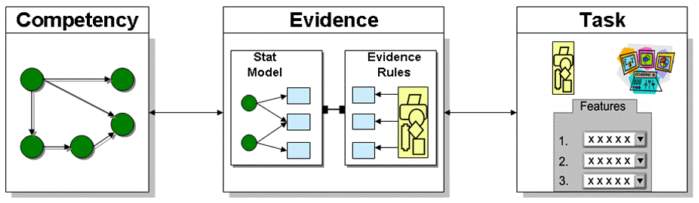

The Conceptual Assessment Framework (CAF) Layer. The CAF layer is the nuts and bolts of the assessment and is responsible for the formal specification of its operational elements. Designers combine information about goals, constraints, and logistics with domain information to create a blueprint for the assessment. The blueprint is expressed in terms of schemas for tasks, psychometric models, specifications for evaluating students’ work and specifications of the interactions that will be supported. This layer thus provides a structure that bridges the work of the previous two layers and the actual objects and processes that will constitute the operational assessment. The objects and specifications of the models in this layer provide the blueprint for tasks, evaluation procedures, statistical models, and the delivery and operation of the assessment, as depicted in Figure 2.

What Are We Measuring? What collection of knowledge and skills should be assessed? The student (or competency) model comprises variables that target aspects of students’ skill and knowledge, at a grain size in accordance with the purpose of the assessment. The structure of these variables is used to capture information about aspects of students’ proficiencies and is a representation of the student’s knowledge (Conrad, Clarke-Midura, & Klopfer, 2014). It reflects the claims that the assessor wishes to make about the learner at the end of the assessment, described with a statistical model (Kim et al., 2016).

Where Do We Measure the Competencies? What tasks should elicit those behaviors that comprise the evidence? A task model describes the task and environment features. The key design elements here are the specification of the cognitive artifacts, the affordances needed to support the student’s activity, and the forms in which students’ performances will be captured. The task model is composed of the scenarios that can draw out the evidence needed to update the student model. Tasks usually correspond to quests or missions in games, whose main purpose is to elicit observable evidence about unobservable competencies (V. J. Shute & Spector, 2008). In games where play is divided into levels, a task corresponds to a game level, although it may sometimes be challenging to define the task boundary (Kim et al., 2016).

How Do We Measure the Competencies? What behaviors or performances should reveal those constructs? The student model and the task model are bridged by the evidence model. It governs the updating of the student model, given the results of the student’s work on tasks (Conrad et al., 2014). In other words, it answers the question of why and how the observations in a given task constitute evidence about the student model variables (V. J. Shute & Spector, 2008). It has two components: an evidence identification component and a statistical component. The evidence identification component provides the rationale and specifications for identifying and evaluating the salient aspects of work products, which are expressed as values of observable variables. The statistical component is responsible for synthesizing, across tasks, the data generated by the evidence identification component. It could be as simple as a percentage-correct score or as complicated as a Bayesian network.
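The two components of the evidence model can be illustrated with a minimal Python sketch. It is not taken from the cited work: the `WorkProduct` fields, the one-hint scoring rule and the conditional probabilities are all assumptions chosen for illustration, and a single application of Bayes' rule to one binary competency stands in for a full Bayesian network.

```python
from dataclasses import dataclass


@dataclass
class WorkProduct:
    """Hypothetical work product: what the player did on one task."""
    task_id: str
    solved: bool
    hints_used: int


def identify_evidence(wp: WorkProduct) -> bool:
    """Evidence identification: reduce a work product to one observable.

    Assumed rule: a performance counts as 'good' only if the task was
    solved with at most one hint.
    """
    return wp.solved and wp.hints_used <= 1


def percent_correct(observables: list[bool]) -> float:
    """Simplest statistical component: share of good performances."""
    return 100.0 * sum(observables) / len(observables)


def update_mastery(prior: float, observed_good: bool,
                   p_good_if_mastered: float = 0.8,
                   p_good_if_not: float = 0.3) -> float:
    """Bayes' rule for one binary competency variable.

    Both conditional probabilities are illustrative assumptions.
    """
    if observed_good:
        like_m, like_n = p_good_if_mastered, p_good_if_not
    else:
        like_m, like_n = 1 - p_good_if_mastered, 1 - p_good_if_not
    numerator = like_m * prior
    return numerator / (numerator + like_n * (1 - prior))


products = [
    WorkProduct("level-1", solved=True, hints_used=0),
    WorkProduct("level-2", solved=True, hints_used=3),
    WorkProduct("level-3", solved=False, hints_used=2),
    WorkProduct("level-4", solved=True, hints_used=1),
]
observables = [identify_evidence(wp) for wp in products]

# Start from an uninformed belief and update it task by task.
belief = 0.5
for observed in observables:
    belief = update_mastery(belief, observed)
```

Here the belief rises after each good performance and falls after each poor one, so the student model can be read at any point during play rather than only at the end.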

Assessment Implementation Layer. This is the fourth layer of the ECD framework, where practitioners create functioning realizations of the models created in the previous layer. Field test data is used to check model fit and to estimate the parameters of the operational system. Tasks and parameters follow the data structures specified in the CAF models.

Assessment Delivery Layer. This is the fifth layer of the ECD framework, in which students interact with the tasks, their performances are evaluated, and feedback and reports are generated. Delivery can be carried out by computers or by humans.

Game Based Assessment

In order to discuss game based learning and whether or not it is truly effective, it is necessary to discuss assessment, both within the games and of the game itself. That is, it is important to be able to evaluate student progress and the ability of a serious game to meet expected learning outcomes. Serious games for learning are widely recognized as having great potential for educational enhancement (Van Eck, 2006). For this reason it is important to be able to measure the effectiveness of a serious game in terms of learning outcomes. Likewise, the game itself needs to be able to measure the progress of an individual student and report it just as it would be reported in a regular classroom. Teachers can, of course, provide their own assessment of student outcomes, but it is important to measure progress and not just outcomes (Shute & Ventura, 2013). Some also view assessment as critical to the growth of serious games, because unless the learning experience can be quantified and validly measured, it is difficult to justify the benefits of serious games over other instructional strategies (Ritterfeld et al., 2009). Ritterfeld et al. (2009) also point out that there is a lack of qualitative game based assessment tools to validate the effectiveness of serious games and state that it is important to stick to quantitative measures to allow comparisons across multiple games.

Bellotti, Kapralos, Lee, Moreno-Ger and Berta (2013) investigated these issues of assessment in serious games. In order for serious games to be considered a viable learning tool by the larger academic community, there needs to be a means of testing and tracking progress that can be understood in the context of the education or training that the game is trying to deliver. That is, students who play a game about physics would need to be tested and have their progress evaluated in the same manner as students in a traditional physics classroom to ensure that both instructional styles can meet identical learning outcomes (Bellotti et al., 2013). If this cannot be done, it becomes hard to draw comparisons between the two types of learning. Without these comparisons, it becomes difficult or impossible to evaluate the effectiveness of serious games, and educators may become unwilling to adopt serious games because of too many unknowns (Ritterfeld et al., 2009). The review by Bellotti et al. (2013) did suggest that serious games increase learning. However, it is important to note that this could not be fairly concluded due to the problems of assessing serious games. It is difficult to do pretest and posttest assessment in this situation because taking the pretest may affect the results (Bellotti et al., 2013). To help solve this problem, the review suggests two areas of further research. The first is characterizing the activities of a player through data mining (Romero & Ventura, 2007). It is necessary to model a user’s actions in terms of learning so that different tasks can be quantified in terms of content and difficulty (Bellotti et al., 2013). This model could be used to build a user profile for the student, which could be carried across multiple games. Such a profile would allow educators to assess the student’s overall progress, strengths and weaknesses. Finally, this data could be aggregated to find common mistakes and misconceptions across a group of students or a subject.

The second area of research suggested by Bellotti et al. (2013) was improvement of how assessment is integrated into the game itself. This is an issue that Shute and Ventura (2013) explored as well. They criticized the American education system overall saying that there is a great deal of room for improvement in the current approach towards student assessment. In particular, standardized summary tests provide little support towards student development. Another part of this issue is that classroom assessment rarely has an effect on student learning, as a student will get a snapshot of their own understanding of the subject, but the class will continue onwards at the same pace with little regard for a single struggling student. A learning game however has the potential to adjust the pace and difficulty to help a struggling student or challenge a student doing notably well at the lesson. However, this requires the game to assess performance on a task-by-task basis and game assessment is typically done after the fact (Shute & Ke, 2012). Flexible difficulty in learning is not possible with assessments done afterwards, so it is critical to integrate continuous assessment into the actual game.
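The task-by-task adjustment described above can be sketched in a few lines of Python. The `DifficultyAdjuster` class, its five-level scale and its three-task window are hypothetical choices made for illustration; they are not drawn from Shute and Ventura (2013) or from any particular game.

```python
from collections import deque


class DifficultyAdjuster:
    """Hypothetical task-by-task difficulty controller."""

    def __init__(self, level: int = 3, window: int = 3,
                 min_level: int = 1, max_level: int = 5) -> None:
        self.level = level
        self.min_level = min_level
        self.max_level = max_level
        # Only the most recent `window` task outcomes are kept.
        self.recent = deque(maxlen=window)

    def record(self, succeeded: bool) -> int:
        """Store one task outcome and return the level for the next task."""
        self.recent.append(succeeded)
        if len(self.recent) == self.recent.maxlen:
            successes = sum(self.recent)
            if successes == len(self.recent):
                # Every recent task succeeded: raise the challenge.
                self.level = min(self.level + 1, self.max_level)
                self.recent.clear()
            elif successes == 0:
                # Every recent task failed: ease off.
                self.level = max(self.level - 1, self.min_level)
                self.recent.clear()
        return self.level


adjuster = DifficultyAdjuster()
levels = [adjuster.record(outcome) for outcome in (True, True, True)]
```

Because the level is recomputed after every task, the adjustment depends on assessment that runs during play; a score computed only after the game ends could not drive it.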

Shute and Ventura (2013) state that in-game assessment should be not only valid and reliable, but also it should be unobtrusive to promote player engagement. They refer to such assessment as stealth assessment (Shute, 2011). Assessment in this form is completely integrated into the gameplay of the game (Shute & Ventura, 2013). Not only are these assessments a full part of the game, but players are likely to not be aware that they are being evaluated as the assessment should be invisible to the player. Players provide evidence of their skill in how they interact with the game, rather than directly answering questions. The way in which they interact with the game-world and perform a given task while reaching a specific outcome can be analyzed for the desired learning gains. A physics game for example can consider if a player is able to complete a golf shot correctly by looking at not only the angles they use and whether the ball reaches its target, but also how long it took the player to determine how hard to strike the ball and if they looked for extra help or hints. This allows assessment of player skill without breaking engagement by having a quiz or other obtrusive assessment method appear on the screen (Shute & Ventura, 2013).
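As a rough illustration of the golf example, the following Python sketch turns one logged shot into observable variables without ever asking the player a question. The `ShotAttempt` record, its field names and both thresholds are assumptions made for this sketch, not details of any game described by Shute and Ventura (2013).

```python
from dataclasses import dataclass


@dataclass
class ShotAttempt:
    """Hypothetical log record for one golf shot in a physics game."""
    launch_angle_deg: float
    target_angle_deg: float
    reached_target: bool
    seconds_to_decide: float
    hints_requested: int


def shot_observables(shot: ShotAttempt,
                     angle_tolerance_deg: float = 5.0,
                     slow_after_seconds: float = 30.0) -> dict[str, bool]:
    """Turn one logged attempt into observable variables.

    Both thresholds are assumptions chosen for illustration.
    """
    angle_error = abs(shot.launch_angle_deg - shot.target_angle_deg)
    return {
        "angle_ok": angle_error <= angle_tolerance_deg,
        "reached_target": shot.reached_target,
        "decided_quickly": shot.seconds_to_decide <= slow_after_seconds,
        "worked_unaided": shot.hints_requested == 0,
    }


attempt = ShotAttempt(launch_angle_deg=42.0, target_angle_deg=45.0,
                      reached_target=True, seconds_to_decide=48.5,
                      hints_requested=1)
observables = shot_observables(attempt)
```

In this example the player reaches the target with a reasonable angle but takes a long time and asks for a hint, which is a richer picture than a single right-or-wrong mark.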

Assessment of serious games and player progress within them is an important issue to keep in mind when discussing the effectiveness of learning games (Bellotti et al., 2013). Without a commonly accepted model as a way to assess the effectiveness of games compared to traditional learning methods, it is hard to quantify the results of such tests.

Quantitative Assessment of Serious Games

While there are numerous problems with the assessment of serious games, as discussed above (Bellotti et al., 2013; Ritterfeld et al., 2009; Shute & Ventura, 2013), there have been attempts to quantitatively assess learning gains from educational games. In one example, Eseryel, Ge, Ifenthaler and Law (2011) examined a group of ninth graders using an online game to learn various science, technology, engineering and math (STEM) topics, and how well the students were able to tackle the complex problem solving required by the game. Real world STEM problems are often very difficult for new employees. This is because a college student usually graduates with a great deal of theoretical knowledge, but little experience in applying that knowledge to workplace problems (Eseryel et al., 2011). A virtual game world presents the opportunity to make interesting and complex problems for students, and online games allow students to work together as they would on a team at work. Eseryel et al. (2011) performed two studies with these students. The first study was to determine if students who used the game showed higher learning gains than those who received traditional teaching in STEM topics. The three dependent variables by which learning gains were measured were problem representation, generating solutions and structural knowledge. Students were assessed on problem representation with a rubric based on critical factors that were identified by experts. The generating solutions score was based on four criteria regarding how well a student explained and provided support for their solution. Finally, structural knowledge was graded by software that compared both the solution and the student’s approach to the problem to those of an expert. Eseryel et al.’s (2011) means of measuring learning gains is notable given that the lack of sufficient serious game assessment methods is an issue throughout the general literature. The results of the problem representation and generating solutions scores do suggest that the students had significant positive changes in their complex problem solving skills as a result of playing the game. It is important to note, however, that performance on test tasks decreased from pretest to posttest in both groups. In terms of structural knowledge, student scores again showed that students who played the game developed a more complex understanding and mental representation of a problem. However, these representations were riddled with new misconceptions. Together, these results show that while games may increase conceptual learning, it is important to provide cognitive regulation and to scaffold the learning to help ensure students are building appropriate and accurate mental representations of the problems.

Eseryel et al. (2011) validated this with a follow-up study done a year later with a new set of ninth graders at the same school. In this study, both groups went through a three week training course on system dynamics modeling. Other than this training, this study was identical to the first study. Students in the control group improved their scores from pretest to posttest on structural knowledge, while the game learning group had a slight decline in the same area. Overall, however, both groups in the second study showed a great increase in complex problem solving skill compared to the groups from the first study. This showed the importance of cognitive regulation, even if the game group did not perform as well as the traditional learning group. Despite this, Eseryel et al. (2011) argue that cognitive regulation should be a consideration when designing serious games. Building such regulation and modeling strategies into the game itself can help students avoid the misconceptions they developed in the first study and help them do better overall, as they did in the second study. A student’s mental model of a problem also needs to be assessed at various points throughout the problem to catch and correct these mistakes as quickly as possible. Such assessment would work best as stealth assessment built into the problem itself.

Another study, by Juan and Chao (2015), also measured the learning gains from a serious game about designing green energy buildings. Their study was based on the attention, relevance, confidence and satisfaction (ARCS) model of motivation (Keller, 1987). This model holds the four factors after which it is named to be the key factors in learning motivation (Reigeluth, 2013). This model differs from the previously discussed intrinsic and extrinsic model in that it is only concerned with motivation to learn. By asking participants to rate their feelings on each of these factors, the study by Juan and Chao (2015) was able to measure not only learning gains but also learning motivation. This study examined two groups of architectural students, one of which received traditional instruction while the other played a board game about developing a sustainable and environmentally friendly city. This board game was designed using the ARCS model as a base for instructional strategy. Students in the game based group showed increased learning motivation compared to the control group. The game based group also scored significantly better on the posttest, indicating that they learned much more than the control group. One limitation of these results, however, is that while students learned more and were more motivated by the game, many of them also listed numerous improvements that needed to be made to the game, such as more interactive mechanisms between players. Many knowledge domains are continuously changing as new advances are made, and timely updates are needed to any serious game teaching that domain. Such updates and tweaks are more difficult in terms of materials and cost with a physical board game, as was used in this study, than with a game played on the computer. For this reason, Juan and Chao (2015) were not able to perform a follow-up with the improvements implemented. However, their results show that game based learning can be an improvement over traditional methods and can be more motivating to students.

Integration of, and Barriers to, Game Based Learning in the Classroom

With the rise of interest in serious games for learning, it is notable that games are not a large part of many learning curricula. This is because while interest in the use of games may be high, integrating games into a learning environment can be very challenging for educators (Blanco et al., 2012). There are many problems schools face when trying to incorporate games into the classroom, but two key issues stand out. The first of these are problems that are beyond the control of the teachers themselves, such as securing funding for acquiring and maintaining adequate computers for the students. Secondly, there is the issue of games needing to meet educational standards. Learning games are created with the sole objective of improving educational outcomes for the players. The majority of these learning games are developed by game designers whose standards do not match the desired learning outcomes of the schools. The main reason for this is that game mechanics are not naturally created as instruments to promote and assess learning, but focus the interaction to promote fun. This causes a disconnect between the standards of the game and those of the teacher, district and state in which the student is learning. Facilitating smoother integration into the classroom is essential if serious games are to become a key portion of the classroom. Potential methods and strategies for doing this are discussed in the proposed research section later in this paper.

Blanco et al. (2012) proposed a framework to help educators integrate games into their work flow by providing three key tools. The first tool is a method of assessing student progress. This assessment is a combination of four values. The first is a global score, which is a combined rating for all in-game tasks. The second is whether or not the player completed the game, and the third and fourth are the total time spent in the game, including time with the game paused, and the time spent actively playing the game. Having this rubric for assessing student achievement is important for educators trying to include games in their classroom work flow. The second tool from the framework is adapting the learning experience during play to meet the needs of each individual student. This potential for serious games was discussed by Shute and Ventura (2013). The final tool from Blanco et al.’s (2012) framework is a call for educators to share successful integration methods, techniques and curricula with each other. The root of the integration problem is that educators are unsure of how to go about it. If those who are successful share their ideas, educators can come up with their own framework for how to do this integration with as few issues as possible in order to bring games into more classrooms.
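The four-value assessment could be represented as a small record, as in the Python sketch below. The class and field names are hypothetical, and computing the global score as the mean of per-task scores is an assumption; the description above says only that the global score combines the ratings for all in-game tasks.

```python
from dataclasses import dataclass


@dataclass
class GameAssessment:
    """The four values described above, for one student and one game."""
    global_score: float    # combined rating for all in-game tasks
    completed: bool        # whether the player finished the game
    total_seconds: float   # time in the game, including paused time
    active_seconds: float  # time spent actively playing

    def __post_init__(self) -> None:
        if not 0 <= self.active_seconds <= self.total_seconds:
            raise ValueError("active time must lie between 0 and total time")

    @property
    def paused_seconds(self) -> float:
        """Time with the game paused, derived from the two stored times."""
        return self.total_seconds - self.active_seconds


def summarize(task_scores: list[float], completed: bool,
              total_seconds: float, active_seconds: float) -> GameAssessment:
    """Assumed aggregation: the global score is the mean of task scores."""
    global_score = sum(task_scores) / len(task_scores)
    return GameAssessment(global_score, completed,
                          total_seconds, active_seconds)


report = summarize([8.0, 6.0, 10.0], completed=True,
                   total_seconds=1800.0, active_seconds=1500.0)
```

Keeping both time values lets a teacher distinguish a student who left the game paused from one who spent the whole session engaged with the tasks.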

One area within education that has been particularly resistant to integrating games is the medical training field (Eitel & Steiner, 1999). The medical field in general is highly resistant to changes in training methods. Medical students will be expected to save lives, and medical educators are opposed to switching from training methods that have proven effective in the past (Eitel & Steiner, 1999). There is certainly a great deal at stake in training students effectively in this field, but it is important for training methods to adapt to changes in pedagogy in the wider field of education. Eitel and Steiner (1999) again point to the lack of a uniform method of assessing serious games as a source of the reluctance to adopt games into medical training. One problem they note with studies on the effectiveness of serious games is that, due to the complexity of the issue, experimental design becomes difficult, particularly when trying to gather quantitative data, as the learning outcomes should be quantifiable, measurable and concrete rather than abstract (Ritterfeld et al., 2009). Since most data is then qualitative and it is difficult to perform an unbiased field study in this area, the results are either weak or highly controversial. An approach Eitel and Steiner (1999) suggest to address this issue is using evidence-based learning to help quantify learning. In terms of course design, the key features of evidence-based learning are evaluation of empirical evidence, supporting intrinsic motivation through course design, and a standard learning format that can serve as a base for comparing changes in learning quality. This use of a standard format for learning is of note here. The lack of a universal tool by which to assess serious games has been a problem encountered frequently throughout the literature reviewed here.

Stealth assessment

Formative and summative are the two main types of assessment. Formative assessment occurs during the learning process, while summative assessment occurs after the learning has taken place. Formative assessments evaluate the learner’s strengths and weaknesses and help educators tailor their practice accordingly, while summative assessments are high stakes and provide feedback to the learners. Formative assessments generate data that is critical to high-quality teaching. They are a powerful but highly resource-intensive teaching tool (West & Bleiberg, 2013).

As mentioned in the sections above, stealth assessment is a ubiquitous and unobtrusive assessment technique discreetly embedded deep within the game (V. J. Shute & Wang, 2015), utilizing the copious amounts of data generated during the game. It helps educators address concerns prior to the actual assessment of the students (Arnab et al., 2015) and reduces learners’ test anxiety, while maintaining validity and reliability (V. J. Shute et al., 2010). The assessment is usually done using an evidence-centered design approach, which is a core component of stealth assessment, allowing effective monitoring of the student’s level of achievement and competencies (Crisp, 2014). ECD supports embedding diagnostic, summative and formative assessments holistically into the student’s journey throughout the game, with the main disadvantage being the high cost of implementing the full-scale model. A more practical approach is to adapt the ECD design framework rather than implement it in full, with the key elements being the student model, task model and evidence model. One of the key features of this assessment is that it does not interrupt the participant’s flow during the activity, unlike survey questionnaires and other modes of assessment, and it does not rely on the learner’s perception or memory of the learning task (Jacovina, Snow, Dai, & McNamara, 2012). Stealth assessment also saves the learner’s valuable time, as these measures are woven into the fabric of the game play and do not require extra time allocation, while ensuring engagement (Baron, 2017). In traditional teaching, the flow is usually interrupted for assessment, followed by delayed feedback that is of little use because new learning has already started by that time (Crisp, 2014). One of the main goals of stealth assessment is to eventually blur the line between learning and assessment (Moreno-Ger, Martinez-Ortiz, Freire, Manero, & Fernandez-Manjon, 2015). Moreover, in traditional tests each question has a unique answer, while in a game setting the events in a sequence are highly dependent on each other. Also, in traditional tests the questions are designed to measure a single piece of knowledge: one question, one fact. An in-game assessment, by contrast, lets you analyze a series of actions, helping infer the learner’s know-how at any point in time (Shute, Ventura, Small, & Goldberg, 2014).

One way to create a stealth assessment is to use the log data from the game play. However, this would be a summative assessment rather than a formative one and may neglect important changes that occur during the gameplay (Eseryel, Ifenthaler, & Ge, 2011). Did the learner not understand the task? Was the task too difficult? Was he or she too excited? Was it a matter of motivation? In order to have a formative assessment, the learner’s interactions during the game play should be used to generate the assessment. Shute and colleagues (2006) developed a stealth assessment in a physics game called Newton’s Playground, which is based on Newton’s three laws of motion. The problems in the game required the player to use multiple agents of force and motion in the solution to advance to the next level. The game assessed players’ physics knowledge and persistence. A successful player would exhibit an understanding of torque, linear momentum, angular momentum, levers, potential energy, kinetic energy, and other physics principles. Persistence was gauged by the amount of time a player spent trying to solve a difficult problem in the face of failure before giving up. Shute and colleagues have created a number of other games with embedded stealth assessment that measure various constructs, such as creativity (Kim & Shute, 2015).
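The persistence measure described above can be illustrated with a small sketch. It is not the scoring procedure used in Newton’s Playground; the `Attempt` record, its fields, and the problem names are hypothetical, and the sketch simply assumes that each logged attempt carries a duration and a solved flag, then sums the time spent on failed attempts per problem.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    """One logged attempt at a problem (hypothetical log format)."""
    problem_id: str
    seconds: float
    solved: bool


def time_on_failed_attempts(attempts):
    """Return seconds spent on unsuccessful attempts, keyed by problem."""
    totals = defaultdict(float)
    for attempt in attempts:
        if not attempt.solved:
            totals[attempt.problem_id] += attempt.seconds
    return dict(totals)


# Illustrative data only: two failures and a success on one problem,
# and a single abandoned attempt on another.
log = [
    Attempt("lever-1", 40.0, False),
    Attempt("lever-1", 65.0, False),
    Attempt("lever-1", 30.0, True),
    Attempt("pendulum-2", 120.0, False),
]
persistence = time_on_failed_attempts(log)
```

Because the totals are computed from attempts as they accumulate, the same function could be called during play rather than only on a finished log, which is closer to the formative use discussed above.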

Some ethical concerns about fairness may arise if stealth assessments are to move into wider acceptance. If students are aware that they are being assessed, it might influence their behavior. Yet we might be deceiving them if we assess them without their prior knowledge (Walker & Engelhard, 2014).

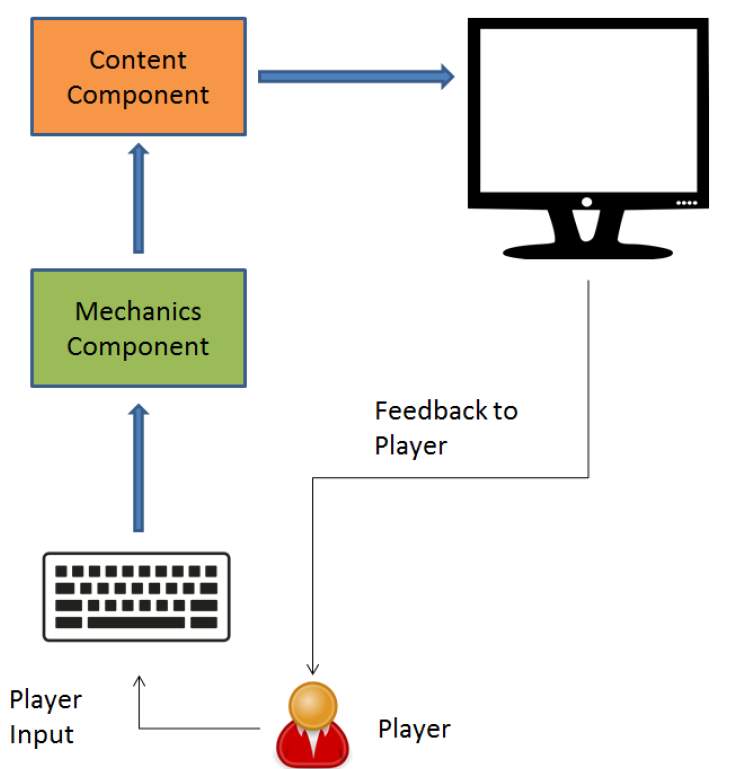

Content Agnostic Game Engineering (CAGE)

Usually the game mechanics are strongly tied to the educational content being taught by the game, which renders the game’s program code unusable for further development. Future projects therefore require a major overhaul of the program, and the code is often discarded because starting over is more cost and time efficient. CAGE is a model for designing educational games that alleviates this problem by separating the game mechanics from the educational content of the game. It follows a design-first approach and speeds up the development process (Baron, 2017). The CAGE model for game based learning is shown in Figure 3. In this model, the player inputs commands via keyboard or mouse, and these are passed to the hardware, which translates them into in-game actions. For example, the player presses the S key, moving their game character backward. This action is passed to the content component, which may evaluate it. After evaluating the action as right or wrong, the content component passes the result of the assessment along the loop, where it appears as feedback to the player. The player, upon seeing the feedback, incorporates it into the next action.
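The loop described above can be sketched in a few lines. This is a minimal illustration rather than Baron’s implementation: the key bindings, the function names, and the feedback strings are all assumptions made for the example. The point it shows is that the mechanics (input to action) know nothing about the content, and the content component only judges actions.

```python
# Mechanics: map raw input to in-game actions (hypothetical bindings).
KEY_BINDINGS = {"w": "move_forward", "s": "move_backward"}


def translate_input(key):
    """Translate a key press into an in-game action, or None if unbound."""
    return KEY_BINDINGS.get(key)


def make_content_component(correct_action):
    """Build a content component that judges a single action as right or wrong."""
    def evaluate(action):
        return action == correct_action
    return evaluate


def game_step(key, evaluate):
    """Run one pass of the loop: input -> action -> evaluation -> feedback."""
    action = translate_input(key)
    if action is None:
        return "unrecognized input"
    return "correct" if evaluate(action) else "try again"


# The content component is created separately from the mechanics.
content = make_content_component("move_backward")
feedback = game_step("s", content)
```

Replacing `content` with a component built for a different subject leaves `translate_input` and `game_step` untouched, which is the separation the model relies on.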

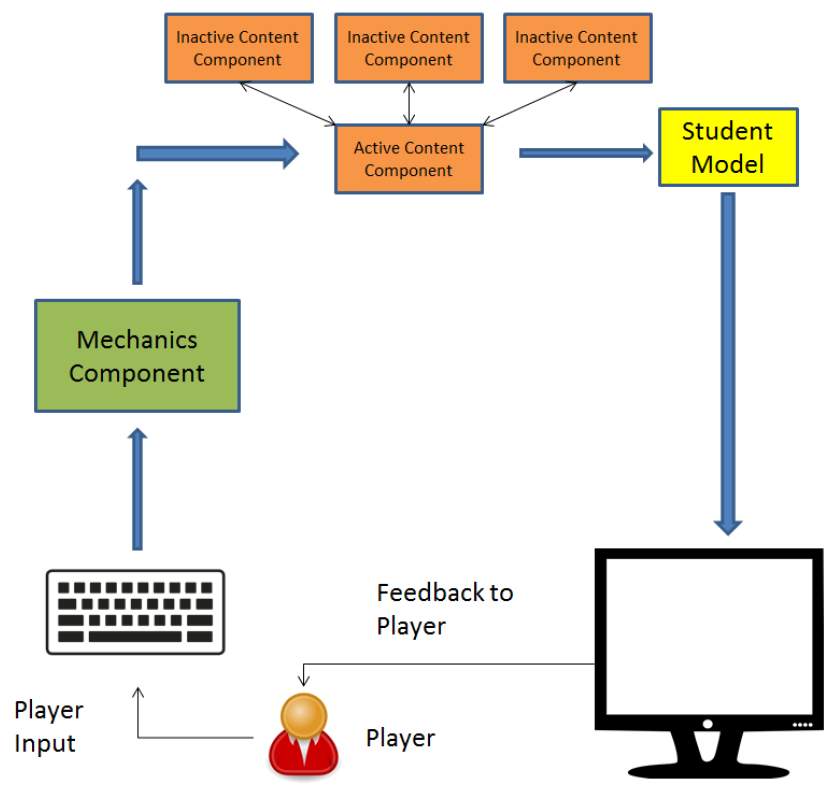

The CAGE model in Figure 4 is like the one shown in Figure 3, with a few changes. It follows the evidence-centered design approach to assessment. A new step for the student model is added to the loop; the student model is updated with every student action but need not be changed for different game content. The content component is the part that is switched. CAGE has several content components instead of one, but only one of them is active at a time.
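As a rough sketch of this arrangement, the code below keeps one student model alongside several interchangeable content components. The class names, the action strings, and the use of a running proportion of correct actions are assumptions made for illustration; a real evidence model would use whatever statistical machinery the assessment design calls for.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass
class StudentModel:
    """Content-independent record of the learner (simplified placeholder)."""
    correct: int = 0
    total: int = 0

    def update(self, was_correct: bool) -> None:
        self.total += 1
        if was_correct:
            self.correct += 1

    @property
    def estimate(self) -> float:
        """Proportion of correct actions so far; 0.0 before any action."""
        return self.correct / self.total if self.total else 0.0


@dataclass(frozen=True)
class AnswerKeyContent:
    """A content component that accepts a fixed set of actions as correct."""
    accepted_actions: FrozenSet[str]

    def evaluate(self, action: str) -> bool:
        return action in self.accepted_actions


class Game:
    """Holds several content components; exactly one is active at a time."""

    def __init__(self, components: Dict[str, AnswerKeyContent], active: str) -> None:
        if active not in components:
            raise KeyError(active)
        self.components = components
        self.active = active
        self.student = StudentModel()

    def switch_content(self, name: str) -> None:
        if name not in self.components:
            raise KeyError(name)
        self.active = name

    def act(self, action: str) -> bool:
        """Evaluate an action with the active content and update the student model."""
        was_correct = self.components[self.active].evaluate(action)
        self.student.update(was_correct)
        return was_correct


game = Game(
    {
        "physics": AnswerKeyContent(frozenset({"use_lever"})),
        "fractions": AnswerKeyContent(frozenset({"pick_half"})),
    },
    active="physics",
)
game.act("use_lever")             # judged by the physics content
game.switch_content("fractions")  # student model is left untouched
game.act("use_lever")             # judged by the fractions content
```

Switching content changes only which component evaluates the next action; the `StudentModel` instance persists across the switch.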

Baron (2017) found that the CAGE framework reduced the number of lines of code and the hours spent from the creation of the first game to the second. He found that developing the second game took one third of the code and, on average, less than half the time compared to the first game component.

Proposed Research

There are several research areas surrounding serious games that merit further exploration. We propose three main research questions for further work. First, what are the core design considerations for implementing stealth assessment in a way that is easier for researchers to use? Second, how can video games be used to assess the key skills needed in the 21st century? Finally, how can stealth assessment be embedded in a CAGE framework so that games can be seamlessly integrated into a classroom to teach any kind of subject?

Video games usually require various skills and competencies in order to succeed, and most of these skills are valuable in this century. For example, strategy-based games like Age of Empires require the player to multitask: players need to work on multiple tasks at once to keep up in the game. We will identify those key skills and how video games can be used to assess them.

Shute and her colleagues have done extensive research on stealth assessment. They mostly used log files, which are a mode of summative assessment. We plan to use stealth assessment for formative assessment, embedding the feedback loop within the game play. The game will adjust its difficulty and make minor content adjustments to adapt itself to the learner, for a better quality of learning. Further, these log files are inundated with data, causing a big data problem. We will work on ways to present the useful data to the researcher in the clearest and most user-friendly way possible.

Finally, there is the matter of making content agnostic games with embedded stealth assessment. Hayes (2005) conducted an expanded literature review which drew conclusions that echoed previous concerns about generalizable assessment of serious games. That review also defined agnostic games as games that have competition as a main feature and in which players must match the skill level of their opponents, be they real people or computer controlled characters. This central competition mechanic is domain independent, as it can be repurposed to fit different domain topics. Repurposing of educational content is already a very frequent activity in academia, as educators attempt to reuse existing materials for slightly different purposes (Protopsaltis et al., 2011). The learning objectives of a serious game are usually designed with a specific teaching method in mind. Therefore, when repurposing serious games for general use across different knowledge domains, it is important to reevaluate these learning objectives. It is also important to investigate the pedagogical approach used in the game, as well designed serious games will have pedagogical principles and approaches integrated into them. More recent approaches have tended toward experiential learning, whereas older serious games may be based on Problem Based Learning. This means that repurposing would take some careful work to rebuild the core approach the game takes towards learning. The type of serious game that is based on experiential learning is called an epistemic game (Rupp et al., 2010). Such games are designed to develop domain specific expertise by placing players within a content rich environment. Further research is needed to address how to repurpose epistemic games so that they can be content agnostic (Hayes, 2005; Protopsaltis et al., 2011; Rupp et al., 2010) while embedding stealth assessment in them. Such games could provide a universal model for building content agnostic games that can be used in a regular classroom to teach any kind of content.

Conclusion

Despite serious games being a relatively new technology, a great deal of work has already been done concerning their use and potential. Serious games are more motivating to many students than traditional learning methods (Juan & Chao, 2015), and yet they have not gained a strong foothold in the classroom. Many teachers remain reluctant to adopt serious games and integrate them into their curriculum, and there are multiple barriers that make such integration difficult (Blanco et al., 2012). The volume of literature, however, makes it clear that there is a distinct interest within the educational community in making serious games a larger part of instruction as time goes on and pedagogical methods continue to evolve with technology (Eitel & Steiner, 1999). Predictions also forecast that a large percentage of businesses will integrate serious games in some form into their training structure (Susi et al., 2007). While serious games are moving slowly into education and workplace training, it is clear that the serious games industry is not going to fade out or collapse anytime in the foreseeable future.

There is a clear gap in literature in regard to assessment of serious games. There has been some work on assessment of student progress and learning gains within the games themselves (Shute, 2013; Eseryel, Ge, Ifenthaler & Law, 2011), but how to assess the games themselves remains a persistent issue (Bellotti et al., 2013). Some attempts have been made to evaluate the effectiveness of serious games (Eseryel et al., 2011), but there is still no commonly accepted methodology for doing so. Until such a methodology is developed, individual attempts to assess the effectiveness of serious games will face validity challenges. This makes assessment of serious games an area for further work by researchers. Ideally, further work would produce a framework of assessment tools which can be applied universally to serious games from any field. The goal would be to produce quantitative rather than qualitative data regarding serious games (Eitel & Steiner, 1999; Bellotti et al., 2013). There are many complexities involved when trying to assess what are often intangible measures (Bellotti et al., 2013), and further research will need to address these. Assessment of serious games presents a rich, but complicated, area for further work.

TABLES

Table 1. Layers of Evidence-Centered Design

| Layer | Role | Key entities | Selected external knowledge representations |
|---|---|---|---|
| Domain analysis | Gather substantive information about the domain of interest that has direct implications for assessment: how knowledge is constructed, acquired, used, and communicated. | Domain concepts, terminology, tools, knowledge representations, analyses, situations of use, patterns of interaction. | Content standards, concept maps (e.g., Atlas of Science Literacy, American Association for the Advancement of Science, 2001). Representational forms and symbol systems of domain of interest, e.g., maps, algebraic notation, computer interfaces. |
| Domain modeling | Express assessment argument in narrative form based on information from domain analysis. | Knowledge, skills and abilities; characteristic and variable task features, potential work products and observations. | Assessment argument diagrams, design patterns, content-by-process matrices. |
| Conceptual assessment framework | Express assessment argument in structures and specifications for tasks and tests, evaluation procedures, measurement models. | Student, evidence, and task models; student model, observable, and task model variables; rubrics; measurement models; test assembly specifications. | Test specifications; algebraic and graphical External Knowledge Representations of measurement models; task template; item generation models; generic rubrics; automated scoring code. |
| Assessment implementation | Implement assessment, including presentation-ready tasks, scoring guides or automated evaluation procedures, and calibrated measurement models. | Task materials (including all materials, tools, affordances); pilot test data for honing evaluation procedures and fitting measurement models. | Coded algorithms to render tasks, interact with examinees, evaluate work products; tasks as displayed; ASCII files of parameters. |
| Assessment delivery | Coordinate interactions of students and tasks: task-level and test-level scoring; reporting. | Tasks as presented; work products as created; scores as evaluated. | Renderings of materials; numerical and graphical score summaries. |

FIGURES

Figure 1. Layers in the evidence-centered assessment design framework.

Figure 2. The central models of the conceptual assessment framework.

Figure 3. CAGE Model.

Figure 4. CAGE Model for educational games using ECD.
